Large-language models (LLMs) can sound like domain experts until they confidently serve up an answer that’s out-of-date or flat-out wrong.

The culprit is simple: model weights are frozen at training time, while your business keeps changing. Without access to live contracts, the latest prices, or last night’s support tickets, an LLM must guess; and that’s how you get hallucinations, stale facts, and zero awareness of company policies.

Retrieval-Augmented Generation (RAG) plugs that gap by letting the model pull in fresh, authoritative material; think PDFs, SharePoint wikis, or email threads; before it writes a word. The result is grounded, context-aware answers instead of educated guesses.

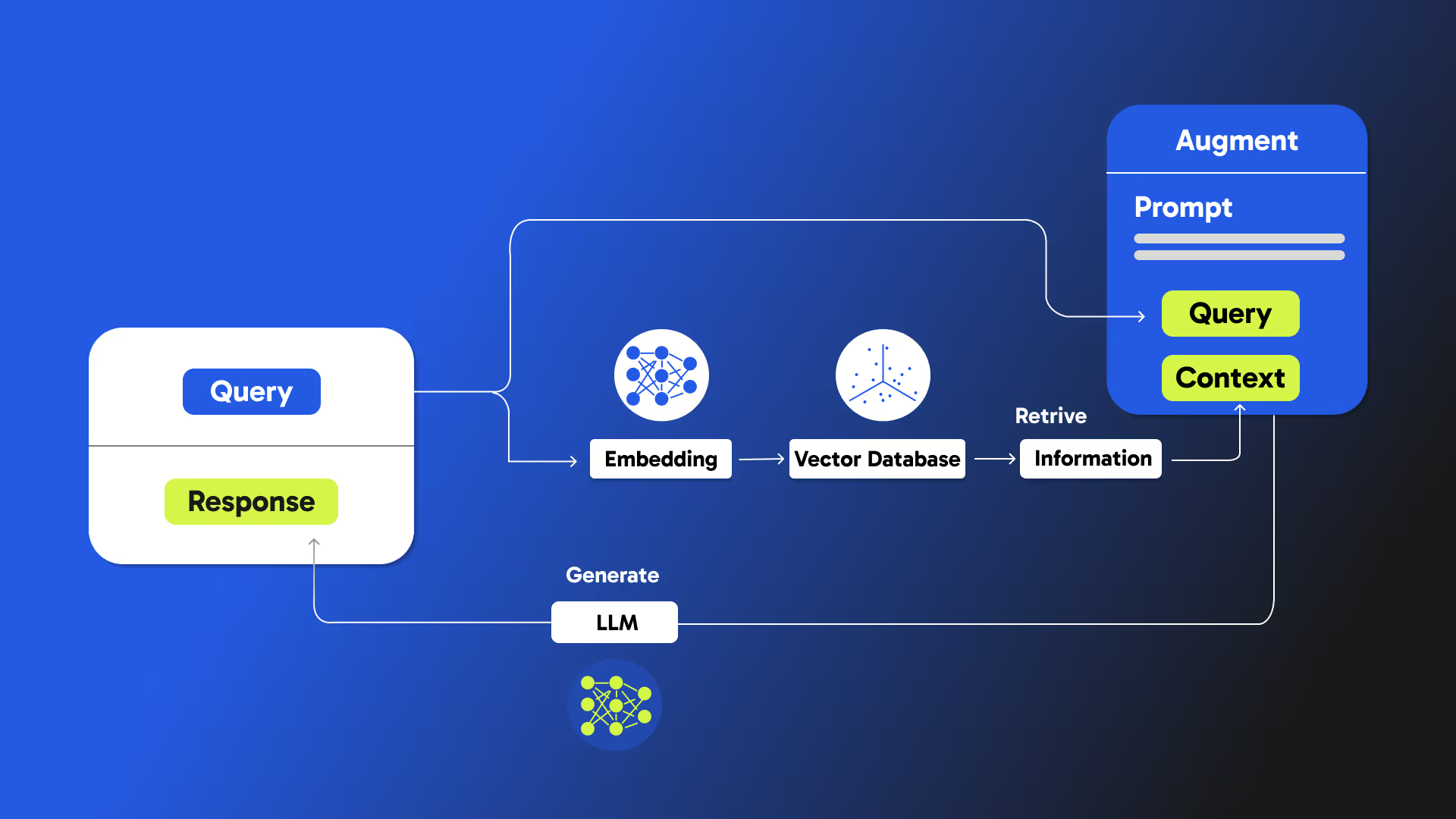

What is RAG? (Retrieval-Augmented Generation)

Together, these four steps bridge the gulf between a model’s general knowledge and your business-specific truth.

Why Most Companies Struggle With RAG

Poor Chunking – Naïve page or sentence splits break tables, clauses, and list items, so key context never makes it to the prompt.

Generic Embeddings – A single “interest” vector blurs bank interest with hobby interest when embeddings aren’t domain-tuned.

Retrieval Trade-offs – Crank up precision and you miss edge cases; crank up recall and you drown the prompt in noise.

Prompt Overload – Dumping every retrieved chunk pushes past token limits and re-introduces hallucinations.

Reality check: 62 % of US finance teams already use AI, but only 35 % have Gen AI embedded into live workflows—most models still lack real-time data feeds.(kpmg.com, kpmg.com)

How Nurix Has Made RAG Enterprise-Ready

Smarter Chunking

We split documents in a way that keeps important structures like tables, bullet points, and policy sections together—especially useful for compliance and legal files.

Domain-Tuned Embeddings

Our AI understands that the same word can mean different things in different industries. Whether you're in finance, energy, or SaaS, we tailor the AI to interpret your language the right way.

Hybrid Retrieval

We use both keyword-based and meaning-based search to find the right answers—whether you're searching for a specific ID or a concept. Then we combine and re-rank them for the best results.

Automatic Query Breakdown

When someone asks a vague question like "Can I expense lunch?", our system breaks it down into more specific sub-questions—like policy limits and approval rules—to find the most accurate answer.

Re-Ranking for Relevance

We prioritize the most relevant answers so the best one always comes first, not buried halfway down.

Security-Grade Pipeline

We’ve built secure systems that keep your data safe even during search. Ensuring sensitive information never leaves trusted environments.

Key Takeaway

RAG is not a checkbox—it’s an architectural commitment to truthful, real-time AI. To work at enterprise scale you need:

- Meaning-based chunking

- Domain-specific embeddings

- Hybrid retrieval + smart re-ranking

- Prompt engineering that trims fat, not facts

- Secure, federated pipelines for sensitive data

Nurix weaves all of the above into a single platform, transforming raw PDFs and messy ticket logs into precision, performance, and trust—so your LLM answers like it actually works at your company.

FAQ

Q1. Does RAG replace fine-tuning?

No. Fine-tuning teaches a model new skills, whereas RAG supplies fresh knowledge at inference time. Many enterprises use both: fine-tune for tone or style, then RAG for up-to-date facts.

Q2. How fast are responses with Nurix’s RAG?

Typical end-to-end latency (query → answer) is under 1 second for <2 KB prompts on a mid-size LLM running in a GPU-backed container. Retrieval is parallelised and cached to stay snappy even at scale.

Q3. What data sources can I plug in?

Anything that can be turned into text: PDFs, DOCX, HTML, Jira tickets, Confluence pages, SQL extracts—even email archives. Connectors stream new content into the index continuously.

Q4. How does Nurix keep my data secure?

All chunks are encrypted at rest, retrieval runs inside confidential-computing enclaves, and optional federated RAG keeps data inside your VPC—no central copy required (see C-FedRAG paper).(arxiv.org)

Q5. What’s the typical lift in answer accuracy?

In customer pilots, moving from vanilla chat-only bots to Nurix RAG cut hallucinations by 30-40 % on policy Q&A and boosted “fully correct” answers from 62 % to 88 %. Results vary by domain but trend similarly.

Q6. Do I need a data-science team to maintain this?

No. Nurix ships with auto-tuning for chunk size, embedding refresh, and retrieval thresholds. Your data team can tweak settings, but default configs are production-ready.

.avif)