We’ve all encountered those automated phone calls—whether it's a sales pitch or a reminder to pay a bill. These interactions are powered by voice agents programmed to follow rigid scripts and respond to a narrow set of commands. This often leads to a frustrating customer experience, as these systems struggle with managing complex queries, understanding human emotions, or even listening effectively to customers.

Problems with Traditional Voice Agents:

- Limited by rigid scripts: Traditional voice agents are confined to a set of pre-programmed responses, making it hard to handle unexpected or nuanced customer queries.

- Inability to manage complex queries: When faced with complex or multi-step requests, these systems often fail, leading to user frustration.

- Lack of emotional understanding: Traditional voice agents cannot detect or respond to human emotions, which are critical in customer interactions.

But what if we could create an advanced conversational AI that engages in more natural and intuitive dialogues? Imagine an AI that detects nuances in speech, interprets varying accents, and even recognizes individual user preferences over time, making each interaction more personalized and effective.

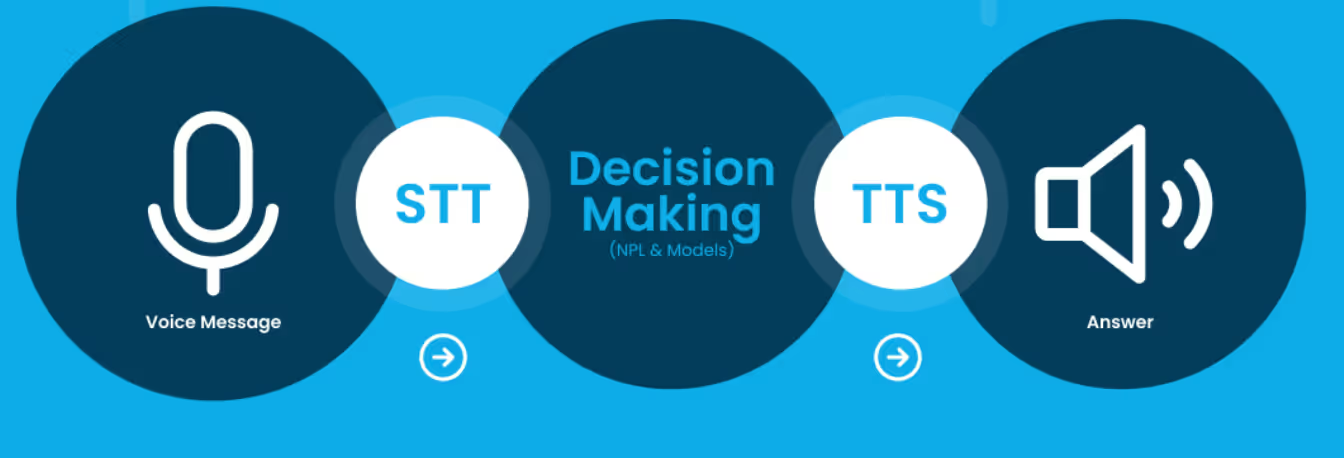

One of our core areas of focus is building models that can create such AI conversational partners and agents. A straightforward but naive approach to this involves stitching together multiple models:

- Speech-to-Text Conversion: Using Automatic Speech Recognition (ASR) models like OpenAI’s Whisper to convert customer voice into text.

- Language Processing: Feeding this text to a language model like LLaMA or GPT-4 to generate a reply.

- Text-to-Speech Conversion: Converting the generated text back to speech using Text-to-Speech (TTS) models.

While there have been significant advancements in each of these individual model types, this approach has several drawbacks:

- Loss of Nuance: When generating text using ASR models, many audio nuances—such as emotion, pauses, or non-verbal sounds—are lost.

- Integration Challenges: Integrating these models, especially with a focus on low latency, is a significant challenge, often resulting in delays in generating a reply.

- Pause and Interruption Management: Managing pauses and interruptions is complex; current systems often either shut down completely or fail to address the interruption properly.

Challenges in Traditional Model Integration:

- Inability to capture emotion, tones, etc.

- High latency

- Difficulty in managing interruptions and pauses

However, recent advancements are enabling us to move beyond this piecemeal approach. Instead of stitching together separate models, we can now use truly multimodal language models that handle both audio and text as inputs and outputs, such as GPT-4o.

The Case for a Unified Model

The motivation behind using a single model for both audio and text is clear: it allows for a more seamless understanding and processing of information across these modalities. A unified model can capture concepts that are difficult to express in text alone. For instance:

- Describing the sound of a house under construction is far less effective than providing the actual audio.

- Differentiating between the sounds of a malfunctioning engine is more straightforward when the model can analyze the audio directly.

With such a model, the possibilities for interaction are endless. Imagine asking the model, "Hey, tell me which bird this is..." and then playing a bird's sound. The model could identify the bird or even mimic a favorite cartoon character's voice for a birthday song.

The Role of Audio Tokenization

To enable a transformer-based language model to accept and process audio as input, the first step is tokenizing the audio. Audio tokenization differs significantly from text tokenization due to the nature of audio signals:

- Audio is Analog: Unlike text, which is discrete, audio is an analog signal that cannot be easily segmented and tokenized.

- Speaker Variability: The same content can sound vastly different depending on the speaker, adding another layer of complexity.

- Information Density: Audio is far less information-dense than text. For example, a single second of audio can equate to 16,000 tokens, while two words might only be 4-6 tokens.

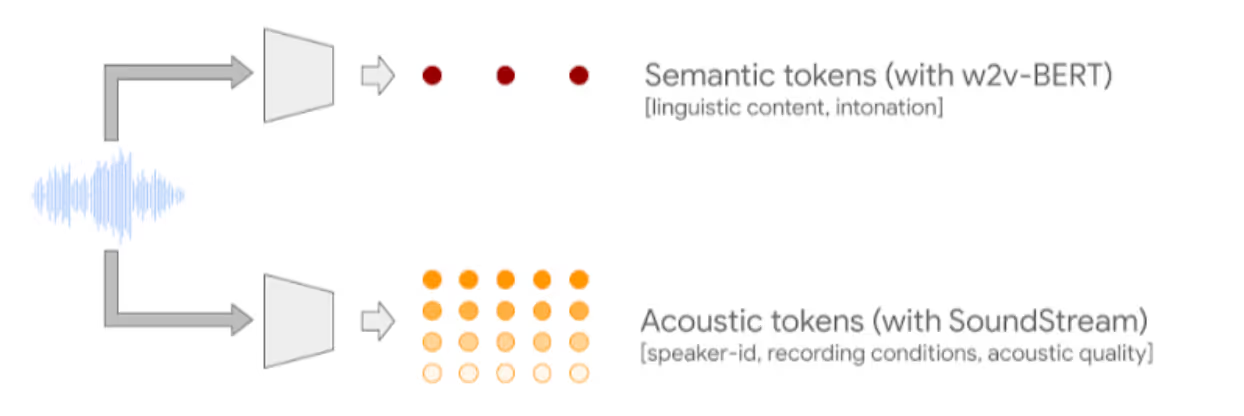

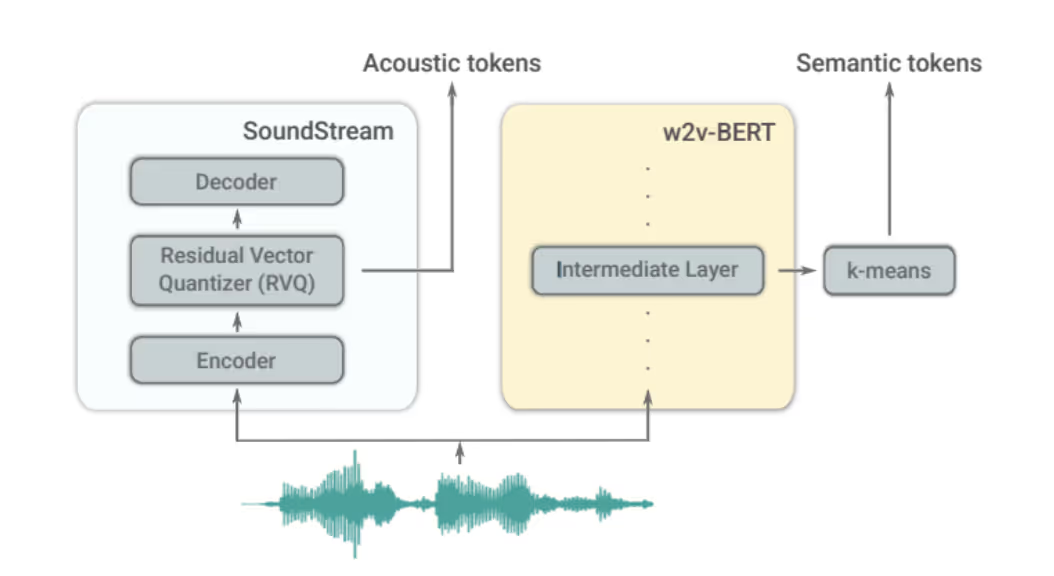

Given these challenges, audio is tokenized using two distinct methods to capture both how words are spoken and how they are read:

- Acoustic Tokens: These tokens are designed for audio compression and preserve speaker traits, prosodic features, and phonetic information. They retain details such as the speaker's voice, accents, and tone. Examples include Google's SoundStream and Facebook's Encodec.

- Semantic Tokens: These tokens preserve the way words are "read," enabling long-term modeling that transformers excel at. Regardless of who is speaking, the semantic content remains consistent. Examples include HuBERT and wav2vec BERT.

In both methods, embeddings from a model's middle layers are discretized into tokens using a codebook—a finite set of embeddings trained alongside the neural networks mentioned above.

Audio Tokenization Methods:

- Acoustic Tokens: Preserve how words are spoken (e.g., accents, tone).

- Semantic Tokens: Preserve how words are read (e.g., consistent meaning across speakers).

These tokens are crucial in the functioning of the audio language model we are developing. Tokenizing audio is just the first step in creating an advanced audio language model, and it opens the door to numerous possibilities in conversational AI.

This journey is just beginning, and we look forward to exploring more aspects of audio language models in future discussions.