Introduction: What Does Voice AI Really Mean?

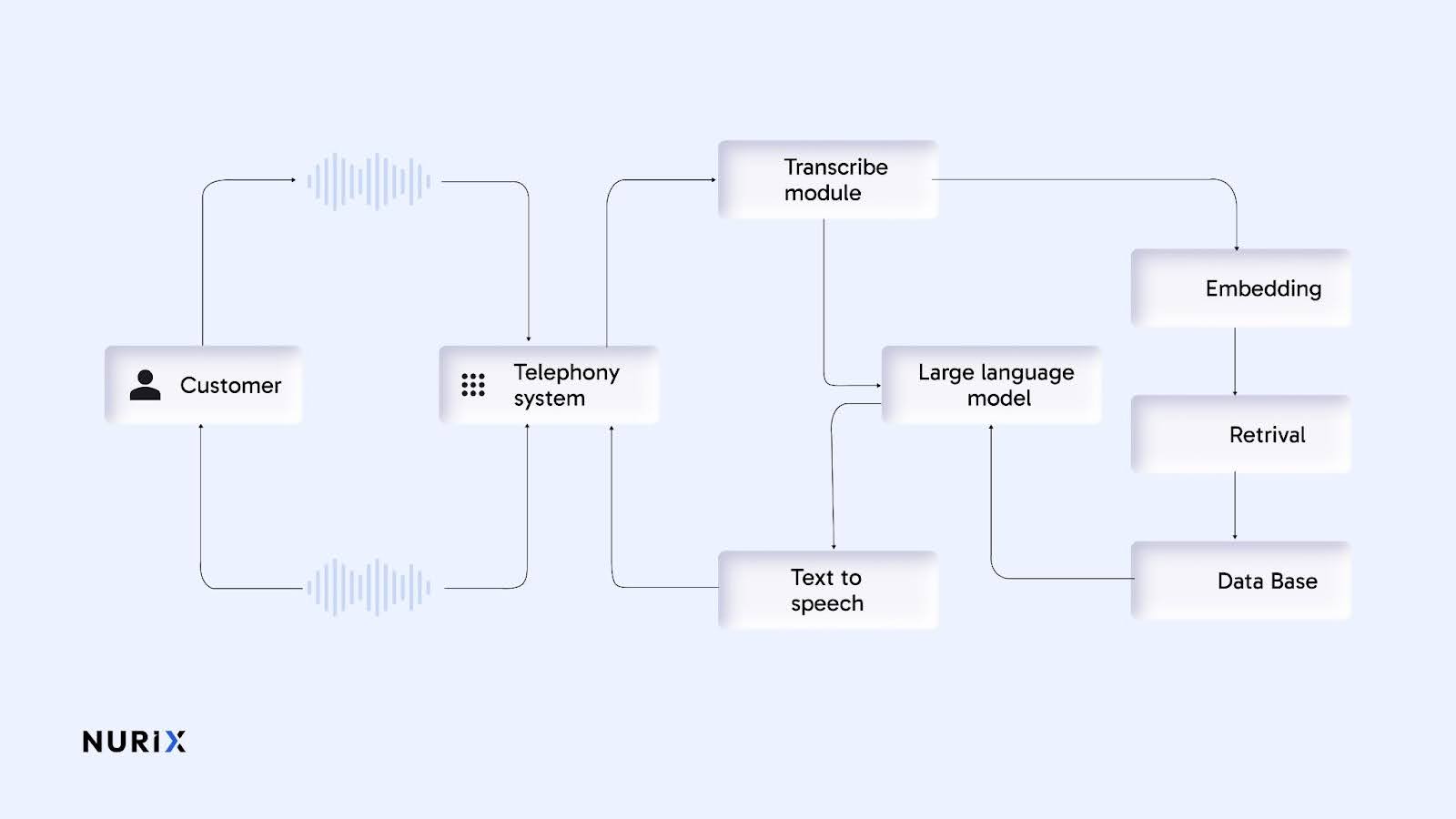

You hear “voice AI” everywhere. Most people picture a bot that converts speech to text and reads a reply back. That is a start, not the destination. The destination is a natural conversation that helps you get something done. You speak in your normal style. The agent understands your intent, asks a quick follow-up question when needed, and completes the task without making you navigate a maze of menu options.

Here is a simple way to think about it. If speech-to-text is the ear, and text-to-speech is the mouth, then real value lives in the brain and the heart of the system. The brain carries context from earlier turns and earlier calls. The heart uses tone and pacing that fit the moment. When these parts work together, the experience feels fluid. It feels like talking to a skilled teammate who respects your time.

In India this matters even more. People shift between English and local languages in the same sentence. Accents vary across regions. Terms change across industries. A good agent adapts without asking you to slow down or change how you speak. That is where voice AI becomes more than a demo. It becomes daily infrastructure.

What Does “Human-Like Voice AI” Really Mean?

Let us separate two ideas: human-sounding and human-feeling. Human-sounding is about audio quality: clear speech, good pronunciation, no cracking or buzzing. Human-feeling goes deeper. It is about how the agent behaves when you pause, when you correct yourself, or when you go off on a short tangent before returning to the point.

People often assume a perfect voice will feel more human. In practice, the opposite can happen. If the voice is always crisp and the timing is always identical, it can sound staged. Real conversation is slightly irregular. You hear small acknowledgments like “hmm” or “got it.” You notice that the reply sometimes starts a moment after you finish, not instantly, because the agent is letting your last word land. These small details build trust.

Think about a call where you explain a complex issue. A human agent takes a breath, repeats a key detail to confirm, and then acts. That beat creates confidence. A human-like voice AI tries to replicate that beat. The aim is not to pretend to be human. It is to make you feel heard and respected so the task flows.

The Three Pillars That Make Conversations Feel Natural

Context Awareness

Context is the difference between a transaction and a relationship. If you called last week to ask about an order, you should not have to repeat the order number today. If you prefer Hindi for billing questions but English for technical support, the agent should follow your lead without making you hunt through settings. Context awareness means the system remembers what matters and uses it at the right time.

There are two layers here. First, within a single call, the agent should keep track of entities, preferences, and prior steps. If you say, “Change the address to the office one,” it should know which address you mean because you mentioned it a minute ago. Second, across calls, the agent should recall important facts within the limits of your consent and the company's data policy. You should be able to say, “Pick up where we left off,” and have it do exactly that.

A quick example: a customer in Bengaluru calls about a broadband issue. Last week they were asked to try a new router setting. Today the agent should open with a quick check: “Last time you switched the router channel. Is the signal stable now, or should I schedule a field visit?” That is context doing real work. Fewer steps. Less frustration.
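The two layers of memory described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular product stores context: the class names `CallContext` and `CrossCallMemory`, the caller ID, and the sample address are all hypothetical, and consent is reduced to a single boolean flag.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CallContext:
    """Within-call memory (hypothetical): entities mentioned so far, keyed by (kind, label)."""
    entities: dict = field(default_factory=dict)

    def remember(self, kind: str, label: str, value: str) -> None:
        self.entities[(kind, label)] = value

    def resolve(self, kind: str, label: str) -> Optional[str]:
        # "Change the address to the office one" -> look up the address labelled "office"
        return self.entities.get((kind, label))


class CrossCallMemory:
    """Across-call memory (hypothetical) that only keeps facts the caller agreed to store."""

    def __init__(self) -> None:
        self._store: dict = {}

    def save(self, caller_id: str, key: str, value: str, consent: bool) -> bool:
        if not consent:
            return False  # policy: nothing is persisted without consent
        self._store.setdefault(caller_id, {})[key] = value
        return True

    def recall(self, caller_id: str, key: str) -> Optional[str]:
        return self._store.get(caller_id, {}).get(key)


# Hypothetical usage: resolve a reference within one call, recall the last step in a later call.
ctx = CallContext()
ctx.remember("address", "office", "12 MG Road, Bengaluru")  # hypothetical address
print(ctx.resolve("address", "office"))

memory = CrossCallMemory()
memory.save("caller-42", "last_step", "switched router channel", consent=True)
print(memory.recall("caller-42", "last_step"))
```

Keeping the two stores separate makes the policy boundary visible: anything in `CallContext` disappears with the call, while `CrossCallMemory` is the only place that consent rules need to guard.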

Listening Style

Listening style is about how the agent behaves while you speak. Humans use backchannels like “okay,” “right,” and “hmm.” These are not filler words. They signal attention. They help the speaker pace the story. A good agent uses light backchannels at the right moments. It does not interrupt mid-word. It does not jump in because it heard a keyword too early.

Turn-taking matters. People leave micro-pauses that signal “your turn.” A respectful agent waits for that gap, then responds. It also knows when to ask a quick clarifying question. For example, you say, “I want to change my plan to the family one.” The agent confirms the plan name and the start date. Short. Clear. No over-talking.
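Waiting for the “your turn” gap is often handled as endpointing: the agent treats the caller's turn as finished only after a run of silence that follows speech. A minimal sketch, assuming an upstream voice-activity detector already labels each 20 ms audio frame as speech or silence; the function name and the 600 ms threshold are illustrative assumptions, and real systems tune the threshold per use case.

```python
from typing import List, Optional


def detect_end_of_turn(frames: List[bool], frame_ms: int = 20,
                       min_gap_ms: int = 600) -> Optional[int]:
    """Return the index of the frame where the caller's turn is judged to end.

    frames: per-frame voice-activity flags (True = speech), assumed to come
    from a separate VAD. The turn ends only after speech has been heard and is
    followed by at least min_gap_ms of continuous silence, so short
    mid-sentence pauses do not trigger a reply.
    """
    needed = max(1, min_gap_ms // frame_ms)  # number of silent frames required
    heard_speech = False
    silent_run = 0
    for i, is_speech in enumerate(frames):
        if is_speech:
            heard_speech = True
            silent_run = 0  # caller resumed talking: the gap starts over
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None  # the caller has not yielded the turn yet
```

A brief hesitation inside a sentence is absorbed because speech resets the silence counter; the agent replies only once the full gap has passed.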

In multilingual India, listening style includes code-switching. A caller might say, “Recharge karna hai for three months.” The agent should detect the intent without forcing the user to translate their own sentence. It can repeat back the key detail in the language the caller used last: “Three months recharge. Shall I proceed?” The tone remains friendly and efficient.
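To make the code-switching point concrete, here is a deliberately simple keyword sketch that reads intent and duration from a mixed Hindi-English (romanized) utterance without translating it first. The keyword tables and the `parse_utterance` function are hypothetical; production systems typically rely on multilingual speech and language models rather than hand-written lists.

```python
import re
from typing import Optional

# Hypothetical vocabularies mixing English and romanized Hindi.
INTENT_KEYWORDS = {
    "recharge": {"recharge"},
    "change_plan": {"plan"},
}
NUMBER_WORDS = {"one": 1, "three": 3, "six": 6, "twelve": 12,
                "ek": 1, "teen": 3, "chhe": 6, "barah": 12}
MONTH_WORDS = {"month", "months", "mahina", "mahine"}


def parse_utterance(text: str) -> dict:
    """Return the detected intent and number of months, if mentioned."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    intent: Optional[str] = next(
        (name for name, words in INTENT_KEYWORDS.items() if words & set(tokens)),
        None,
    )
    months: Optional[int] = None
    # Look for "<number> <month-word>" in either language, e.g. "three months", "teen mahine".
    for prev, tok in zip(tokens, tokens[1:]):
        if tok in MONTH_WORDS:
            if prev.isdigit():
                months = int(prev)
            elif prev in NUMBER_WORDS:
                months = NUMBER_WORDS[prev]
    return {"intent": intent, "months": months}


print(parse_utterance("Recharge karna hai for three months"))
# {'intent': 'recharge', 'months': 3}
```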

Natural Pacing

Pacing is the rhythm of the call. You feel it when the agent slows down for a sensitive step like a payment. You feel it when it speeds up for a routine status check. Natural pacing builds comfort because it matches the weight of the moment.

Silent gaps are not failures. They are part of conversation. A short pause after you ask a tough question gives you space to think. It also gives the agent a chance to compute a precise answer instead of rushing a generic one. The trick is balance. Too many pauses feel slow. Too few feel pushy. The right pacing helps the call feel fair and calm.
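One way to encode “match the weight of the moment” is a small pacing policy that maps each step to a pre-reply pause and a speaking rate, kept inside fixed bounds so the agent never feels sluggish or pushy. The step names, weights, and numeric ranges below are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pacing:
    pause_ms: int        # silence before the agent starts replying
    speech_rate: float   # 1.0 = the voice's default speaking rate


# Hypothetical step weights: higher means more sensitive, so slower delivery.
STEP_WEIGHT = {"status_check": 0.0, "plan_change": 0.5, "payment": 1.0}


def pacing_for(step: str, min_pause_ms: int = 200, max_pause_ms: int = 800) -> Pacing:
    """Interpolate pause and speaking rate from the step's sensitivity weight."""
    weight = STEP_WEIGHT.get(step, 0.5)  # unknown steps get a middle setting
    pause = round(min_pause_ms + weight * (max_pause_ms - min_pause_ms))
    rate = round(1.1 - 0.2 * weight, 2)  # 1.1x for routine steps, 0.9x for sensitive ones
    return Pacing(pause_ms=pause, speech_rate=rate)
```

Because the weight stays between 0 and 1, the pause can never fall outside the chosen bounds; that is the “balance” in code form.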

Small talk can also help when used well. A quick “Hope the festival week went well” during Diwali can feel warm in India. The agent should keep it short and relevant. It returns to the task without drifting. That mix of warmth and focus is where human-like conversation lives.

Try the Difference Yourself with Nurix Voice AI

You can feel these ideas more than you can read them. Try a live agent. Start talking before it finishes a sentence and see what happens. A strong system listens while you speak. It does not panic. It adjusts and responds at the right time. Ask two questions in a row. Then switch topics for a moment and come back. A strong system will not lose the thread.

Speed matters. Low latency helps the entire experience feel alive. You should not wait several seconds after every sentence. You should hear an acknowledgment or see progress quickly. Memory matters as well. When you return after lunch, the agent should still know the order you were tracking if you choose to allow that.
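A common way to keep a call feeling responsive while a slow lookup runs is to speak a short acknowledgment only if the answer is not ready within a small time budget. A minimal asyncio sketch; `lookup` and `say` stand in for a hypothetical backend query and text-to-speech call, and the 300 ms budget is an assumption.

```python
import asyncio
from typing import Awaitable, Callable


async def respond_with_ack(
    lookup: Callable[[], Awaitable[str]],
    say: Callable[[str], Awaitable[None]],
    ack_after: float = 0.3,
    ack_text: str = "One moment, let me check that.",
) -> str:
    """Run the lookup; if it is not done within ack_after seconds, acknowledge first."""
    task = asyncio.create_task(lookup())
    done, _pending = await asyncio.wait({task}, timeout=ack_after)
    if not done:
        await say(ack_text)  # the caller hears something quickly instead of silence
    result = await task
    await say(result)
    return result
```

Fast answers go out directly with no filler; only slow ones earn the acknowledgment, so the agent does not pad every reply.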

If you want to test this in your environment, pick one call type. For example, let the agent handle delivery status queries. Watch how people talk. Notice the places where context, listening, and pacing remove friction. That is where business value shows up first.

Use Cases: Scale Your Top 1% Agent to Everyone

Every company has a few agents who are excellent. They remember context. They stay calm under pressure. They use simple language and confirm key details. Human-like voice AI lets you scale those habits across thousands of calls.

Start with high-volume, high-variance tasks. Support and troubleshooting are clear candidates. For example, “My internet is slow at night.” A capable agent asks three short questions. It checks line quality, recent outages, and router settings. It then suggests a fix or schedules a visit. Each step uses context from the current call and from past interactions with permission.

Order status and returns are a natural next step. People want straight answers and clear options. The agent should pull data from the right system and share only what is needed. “Your order left the hub this morning. It is due tomorrow before 6 pm. I can set an SMS alert for delivery.” Short, accurate, done.

Onboarding and collections can benefit as well. In onboarding, the agent confirms identity, collects a few documents, and schedules follow-ups. In collections, the agent uses a steady tone and offers realistic paths. It might say, “You can pay today, or you can set up two split payments next week. Which works for you?” The style is firm and respectful.

Scheduling often becomes a bottleneck for teams. A voice agent that knows your working hours, holidays, and travel rules can propose slots that fit those constraints. In India, it can handle names and locations that vary in spelling without constant repeats: “Shall I book the cab pickup at 9 am from Indiranagar, with the drop at Manyata Tech Park?”
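Proposing slots that respect working hours and holidays is mostly a filtering problem. A minimal sketch, assuming a Monday-to-Friday week, one-hour slots, and a 9 am to 6 pm window; the holiday set is passed in by whoever calls the function, and all names here are hypothetical.

```python
from datetime import date, datetime, time, timedelta
from typing import List, Set


def propose_slots(start: date, days: int, holidays: Set[date],
                  open_at: time = time(9), close_at: time = time(18),
                  slot: timedelta = timedelta(hours=1), limit: int = 3) -> List[datetime]:
    """Return up to `limit` slot start times within working hours, skipping weekends and holidays."""
    slots: List[datetime] = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        if day.weekday() >= 5 or day in holidays:  # 5 = Saturday, 6 = Sunday
            continue
        t = datetime.combine(day, open_at)
        end = datetime.combine(day, close_at)
        while t + slot <= end and len(slots) < limit:
            slots.append(t)
            t += slot
        if len(slots) >= limit:
            break
    return slots
```

Offering only a few slots keeps the spoken reply short; the caller can always ask for more.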

The goal is not to replace human agents. It is to let them focus on hard cases that need empathy and judgment. The AI handles repetitive calls with consistent quality. Your best practices spread to every interaction. The customer experience becomes more stable. Your team’s workload becomes healthier.

The Future of Human-Like Voice AI

We are leaving behind rigid IVR menus and script trees. The future is real-time, emotionally aware agents that can reason across systems. Low latency will be expected, not special. Sub-second acknowledgments will become normal for well-designed tasks. Context memory across sessions will feel like table stakes for brands that want repeat business.

Tone and sentiment understanding will guide the agent’s style. If a caller sounds frustrated, the agent slows down and confirms more. If the caller sounds rushed, the agent shortens replies and gets to the outcome. Sentiment analysis is not mind-reading. It is pattern detection across words and vocal cues like pitch and energy. It needs guardrails and human review for quality and fairness.
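The style adjustments above can be expressed as a small rule table gated by a confidence threshold, which is one simple guardrail: weak or uncertain signals leave the default behaviour unchanged. A minimal sketch, assuming a hypothetical upstream classifier that outputs a sentiment label and a confidence score; the labels, threshold, and style values are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplyStyle:
    max_sentences: int
    confirm_details: bool
    speech_rate: float


DEFAULT_STYLE = ReplyStyle(max_sentences=3, confirm_details=False, speech_rate=1.0)


def choose_style(sentiment: str, confidence: float, threshold: float = 0.7) -> ReplyStyle:
    """Map a (hypothetical) sentiment label to a reply style."""
    if confidence < threshold:
        return DEFAULT_STYLE  # guardrail: uncertain signals do not change behaviour
    if sentiment == "frustrated":
        # slow down and confirm more
        return ReplyStyle(max_sentences=3, confirm_details=True, speech_rate=0.9)
    if sentiment == "rushed":
        # shorten replies and get to the outcome
        return ReplyStyle(max_sentences=1, confirm_details=False, speech_rate=1.1)
    return DEFAULT_STYLE
```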

Transparency will be a core feature, not an afterthought. Teams will review transcripts, highlights, and tone cues to improve journeys. You will see which steps cause drop-offs. You will identify moments where a different prompt or a different policy would help. Analytics will connect quality to business outcomes like resolution time and repeat contact rate.
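Repeat contact rate is one of the outcome metrics mentioned above, but its definition varies between teams. The sketch below assumes one common reading: the share of calls that are followed by another call from the same caller within seven days. The function name and the window are assumptions.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def repeat_contact_rate(calls: List[Tuple[str, datetime]],
                        window: timedelta = timedelta(days=7)) -> float:
    """Share of calls followed by another call from the same caller within `window`."""
    if not calls:
        return 0.0
    by_caller: Dict[str, List[datetime]] = {}
    for caller, ts in calls:
        by_caller.setdefault(caller, []).append(ts)
    repeats = 0
    for times in by_caller.values():
        times.sort()
        # Count each call whose next call from the same caller falls inside the window.
        repeats += sum(1 for a, b in zip(times, times[1:]) if b - a <= window)
    return repeats / len(calls)
```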

Another important shift is language coverage. India has a rich language landscape. Agents will need to handle dialects, accents, and code-switching without pushing users to change. Community efforts like Mozilla's Common Voice are expanding open speech data for diverse speakers. Government-led programs are also working to bridge language gaps; the Bhashini initiative is a good example. These efforts help the ecosystem build tools that respect how people actually speak.

Privacy and control will shape trust. Companies should set clear retention windows. They should redact sensitive information in real time. They should make it easy for users to say what can be remembered and for how long. This is not just compliance. It is how you earn the right to keep improving the experience.
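Real-time redaction and retention windows can start as simply as pattern substitution on each transcript segment plus a periodic purge. A minimal sketch: the two regular expressions (card-like digit runs and Indian mobile numbers), the 30-day window, and the `StoredTranscript` type are illustrative assumptions, and real redaction needs much broader coverage and testing.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Illustrative patterns only, applied in this order.
PATTERNS = {
    "CARD": re.compile(r"(?<!\d)\d(?:[ -]?\d){12,15}(?!\d)"),       # 13-16 digit card numbers
    "PHONE": re.compile(r"(?<!\d)(?:\+91[ -]?)?[6-9]\d{9}(?!\d)"),  # 10-digit Indian mobiles
}


def redact(text: str) -> str:
    """Replace sensitive spans with a label before the text is stored or logged."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


@dataclass
class StoredTranscript:
    text: str
    created: datetime


def purge_expired(items: List[StoredTranscript], now: datetime,
                  retention: timedelta = timedelta(days=30)) -> List[StoredTranscript]:
    """Keep only transcripts still inside the retention window."""
    return [t for t in items if now - t.created <= retention]
```

Redacting before storage, rather than after, means sensitive values never reach the transcript archive in the first place.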

Finally, multi-agent systems will quietly power many of these improvements. One agent listens and structures the intent. Another pulls data from the right system. A third chooses the right tone for the reply. You do not see that choreography on the surface. You just feel the result. The call flows. The outcome arrives.
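The division of labour described above can be sketched as three plain functions passing a shared turn object along. In a real deployment each stage might be a separate model or service; here the keyword intent check, the dictionary of orders, and every function name are hypothetical stand-ins chosen only to show the hand-offs.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Turn:
    utterance: str
    intent: Optional[str] = None
    data: Optional[Dict[str, str]] = None
    reply: Optional[str] = None


def intent_agent(turn: Turn) -> Turn:
    """Listens and structures the intent (a keyword stand-in for a real model)."""
    turn.intent = "order_status" if "order" in turn.utterance.lower() else "unknown"
    return turn


def data_agent(turn: Turn, orders: Dict[str, Dict[str, str]]) -> Turn:
    """Pulls data from the right system (a dict stands in for an order API)."""
    if turn.intent == "order_status":
        turn.data = orders.get("latest")
    return turn


def tone_agent(turn: Turn, caller_rushed: bool) -> Turn:
    """Chooses the tone of the reply: shorter when the caller is in a hurry."""
    if turn.data is None:
        turn.reply = "Could you tell me a little more about what you need?"
    else:
        core = f"Your order is {turn.data['status']}, due {turn.data['eta']}."
        turn.reply = core if caller_rushed else f"Thanks for waiting. {core}"
    return turn


def handle(utterance: str, orders: Dict[str, Dict[str, str]], caller_rushed: bool = False) -> str:
    turn = intent_agent(Turn(utterance))
    turn = data_agent(turn, orders)
    turn = tone_agent(turn, caller_rushed)
    return turn.reply or ""
```

Each stage only reads and writes its own fields on `Turn`, so any one of them can be swapped for a stronger model without touching the others.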

Closing CTA

If you want to explore human-like voice AI, pick one use case and measure it. Aim for natural talk time, quick acknowledgments, and clean handoffs to humans when needed. Give the agent a small set of tasks and a clear policy on memory and privacy. Improve weekly with transcripts and feedback. Then widen the scope with confidence.

Contact us to learn more.