Coding LLMs have expanded rapidly, from simple code assistants to AI partners capable of advanced reasoning and hands-on collaboration. Approximately 78% of global organizations report using AI in at least one business function, and 71% regularly use generative AI, a shift that is changing how software is architected, tested, and shipped.

In 2025, choosing between Claude 3.7 Sonnet, Grok 3, and o3-mini-high is critical for any tech leader or developer.

Claude 3.7 Sonnet stands out as the top performer for code generation. Grok 3 and o3-mini-high offer comparable results, but Grok 3 generally produces slightly better code than o3-mini-high.

This blog provides a detailed side-by-side analysis of these three leading models, highlighting their key strengths and differences.

AI Models at a Glance: Quick Comparison Table

Choosing between Claude 3.7 Sonnet, Grok 3, and o3-mini-high is central for developers and tech leaders in search of the optimal balance of coding quality, speed, integration, and cost.

The following table provides a side-by-side overview of their core technical specs and market positioning, helping you quickly identify which model best matches your workflow and business needs.

Before you decide which model best fits your workflow, it’s crucial to understand the strengths and unique traits each brings to the table.

Understanding Each AI Model in Detail

As AI-powered coding assistants become core to how developers architect, debug, and deploy software, the choice of model shapes everything from developer productivity to business ROI. Claude 3.7 Sonnet, Grok 3, and OpenAI’s o3-mini-high are widely recognized as 2025’s most competitive, next-generation coding models. Yet each brings a distinct profile of technical strengths and use-case fit.

Claude 3.7 Sonnet: Strong Reasoning and Agentic Coding

Released by Anthropic on February 24, 2025, Claude 3.7 Sonnet is what Anthropic describes as the first hybrid reasoning model on the market. It offers both near-instant responses and extended, visible step-by-step thinking, letting users choose the response style.

Its design blends rapid and deep reasoning into one unified model, improving coding and front-end web development workflows. Anthropic launched it alongside Claude Code, a command-line tool for agentic coding (initially released as a limited research preview) that helps developers delegate complex engineering tasks directly from the terminal.
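In the API, the choice between a quick answer and extended thinking is made per request. The sketch below assumes Anthropic's Messages API request format and Claude 3.7 Sonnet's launch model ID `claude-3-7-sonnet-20250219`. It only builds the request body and never sends it. The helper name `build_messages_request` and the token numbers are illustrative.

```python
import json

# Claude 3.7 Sonnet's model ID at launch (assumed still valid; check Anthropic's model list).
MODEL_ID = "claude-3-7-sonnet-20250219"

def build_messages_request(prompt: str, extended_thinking: bool,
                           budget_tokens: int = 4096, max_tokens: int = 8192) -> dict:
    """Build a Messages API request body; nothing is sent over the network."""
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if extended_thinking:
        # The thinking budget must be at least 1,024 tokens and smaller than max_tokens.
        if not 1024 <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
        body["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return body

quick = build_messages_request("Rename `usr` to `user` in this function.", extended_thinking=False)
deep = build_messages_request("Plan a step-by-step refactor of this auth module.", extended_thinking=True)
print(json.dumps(deep, indent=2))
```

With Anthropic's `anthropic` Python SDK, the same fields are passed as keyword arguments to `client.messages.create(...)`. When thinking is enabled, the response contains thinking blocks before the final text block.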

Latest Features:

- Agentic CLI Capabilities: Through Claude Code, Claude 3.7 Sonnet can interpret instructions given in the terminal, run shell commands and scripts, and manage file operations within the permissions the user grants.

- Improved Syntax Checking & Linting: The latest update (June 2025) improved Sonnet’s ability to auto-correct common syntax errors across Python, JavaScript, and Java, providing inline suggestions and instant linting results.

Grok 3: Enterprise Support with a Conversational Edge

Grok 3, released by Elon Musk’s xAI in February 2025, was trained at massive scale on xAI’s Colossus supercomputer, which xAI says it expanded to roughly 200,000 Nvidia GPUs, doubling the cluster in 92 days. Grok 3 features a unique “Think” mode that breaks complex problems into smaller steps visible to users.

It’s designed for complex problem-solving, real-time information retrieval, and context-aware responses, positioning it alongside ChatGPT and Gemini. It is widely adopted by Fortune 500 engineering teams in sectors like finance and telecom for automating regulatory checks and compliance scripts. Users praise Grok’s seamless integration with Slack, Jira, and CI/CD pipelines.

Key Features:

- Scalability: Designed for high-traffic, large-scale enterprise environments; supports multi-user deployments through xAI’s managed platform.

- Business Integration: API hooks into major SaaS ecosystems, enterprise IAM, and role-driven access. Notable for integrating with business intelligence tools and document automation.

- Multilingual QA: Excels at complex, multi-language code generation and QA automation, supporting 25+ spoken and programming languages.

- Output Safety: xAI’s proprietary filtering and review engine flags potentially insecure or non-compliant code before deployment.

OpenAI’s o3-mini-high: Lightweight, Fast, and API-First

Released on January 31, 2025, o3-mini-high is OpenAI’s o3-mini run at its highest reasoning effort setting, a model optimized for STEM reasoning that balances performance with cost. o3-mini offers three reasoning effort levels (low, medium, and high) to fine-tune speed versus accuracy.

Testers prefer it over older models, noting fewer major errors on tough questions. At medium effort, it matches larger models on tests like AIME and GPQA while lowering latency and cost. It supports developer essentials such as function calling, structured output, and streaming but does not include vision processing.
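In the API, o3-mini-high is not a separate model. It is the `o3-mini` model called with `reasoning_effort` set to `"high"`. The sketch below assumes OpenAI's Chat Completions request format. It builds, but does not send, a request that combines an effort level with a Structured Outputs JSON schema. The helper `build_chat_request` and the review schema are hypothetical.

```python
import json
from typing import Optional

# o3-mini's three reasoning effort levels; ChatGPT's "o3-mini-high" is the "high" setting.
EFFORT_LEVELS = ("low", "medium", "high")

def build_chat_request(prompt: str, effort: str = "medium",
                       schema: Optional[dict] = None) -> dict:
    """Build a Chat Completions request body for o3-mini (not sent anywhere)."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}")
    body = {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }
    if schema is not None:
        # Structured Outputs: the reply must be JSON that matches this schema.
        body["response_format"] = {
            "type": "json_schema",
            "json_schema": {"name": "code_review", "schema": schema, "strict": True},
        }
    return body

# Hypothetical schema for a code-review answer (strict mode needs every field required).
REVIEW_SCHEMA = {
    "type": "object",
    "properties": {
        "bug_found": {"type": "boolean"},
        "summary": {"type": "string"},
    },
    "required": ["bug_found", "summary"],
    "additionalProperties": False,
}

request = build_chat_request("Check this loop for off-by-one errors.",
                             effort="high", schema=REVIEW_SCHEMA)
print(json.dumps(request, indent=2))
```

With the official `openai` Python SDK, these fields map onto keyword arguments of `client.chat.completions.create(...)`. Dropping to `"medium"` or `"low"` trades accuracy for speed and cost.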

Key Features:

- Faster Responses: OpenAI reports that o3-mini responds faster than o1-mini, with an average response time of 7.7 seconds versus 10.16 seconds in A/B testing and a time to first token about 2.5 seconds shorter. This suits interactive coding tools.

- Hosted Availability: o3-mini-high is not open-weight. It is offered through ChatGPT, the OpenAI API, and Microsoft’s Azure OpenAI Service, so regulated or privacy-sensitive teams rely on those hosted deployments rather than on-prem installs.

- Cost Efficiency: Its per-token API pricing is well below Claude 3.7 Sonnet’s, and it is available inside editors such as VS Code through GitHub Copilot.

With each model’s capabilities covered, let’s see how they compare on benchmarks.

Performance Benchmarks: The Numbers Don't Lie

Choosing an AI coding assistant is about how well it performs in real-world scenarios. Speed, accuracy, and problem-solving depth all play a crucial role in determining whether an AI can genuinely enhance your workflow.

Now that we’ve seen how these models perform on paper, it’s time to hear from the people who use them every day. Their experiences give a real-world view beyond the numbers.

Developer Reactions: What the Community is Saying

The true test of any AI model lies in the hands of its users, the developers who rely on these tools day in and day out. Their experiences reveal insights that benchmarks alone can’t capture. Let’s hear what the community is saying about these contenders.

Claude 3.7 Sonnet: Revolutionizing Development Workflows

- Developers praise its full-stack coding assistance, with reports of generating “comprehensive JSX pages… that run flawlessly” in single responses.

- Some users report a 300% improvement in workflow efficiency, producing production-ready sites from natural language prompts in under three minutes.

- Lowers barriers for non-developers: “As someone who isn't a developer, I doubt I could write even a single line of code without AI assistance. Now, I can craft a stunning website… in under three minutes.”

- Strong in technical writing and academic research, producing sophisticated, context-aware documents.

- Prompt engineering is key: “Carefully crafted prompts yield remarkably sophisticated technical discussions comparable to expert human analysis.”

Grok 3: Promising Architecture Meets Mixed Reception

- Uses advanced reasoning with reinforcement learning, enabling “problem-solving strategies that correct errors through backtracking.”

- Posts strong benchmark results, e.g., a 1402 Elo score in Chatbot Arena, outperforming GPT-4o and Gemini 2.0.

- Developers note: “Grok 3 THINK demonstrates a high level of intelligence… employing phrases like 'Wait, but...' during problem-solving.”

- Some users report inconsistent coding results, e.g., failing 2 out of 3 REST API challenges.

- Criticism for verbosity: “I literally tried to read his answer… ChatGPT is more on-point most of the time.”

- Mixed community view reflects architectural promise but uneven practical experience.

OpenAI o3-mini-high: Capacity Constraints Spark Criticism

- Limited to 50 weekly requests, frustrating many users.

- Workflow impacted by switching models: “Asking that model first, switching to high or o1 if it fails,” creating inefficiencies.

- Strong in math and reasoning but seen as “a simple version of full o3,” needing other tools for full development.

- Cost scrutiny: $20/month pricing and $0.40 per complex query seen as costly for teams.

- Leads to hybrid usage strategies balancing cost and capability.

Hearing what developers think gives us valuable insight into how these models work in practice. Next, let’s look at the specific coding strengths that set each one apart.

Coding Capabilities: Where Each Model Shines

Each AI model brings its own unique approach to tackling coding challenges, reflecting different strengths and styles. Understanding where each one excels can help you match the right tool to your specific needs. Here’s a closer look at the coding capabilities that set these models apart.

Claude 3.7 Sonnet: The Agentic Coding Champion

- Industry-leading 70.3% success rate on SWE-bench Verified using Anthropic’s custom scaffold (62.3% with standard scaffolding).

- Excels at breaking down complex software engineering tasks into manageable steps.

- Extended thinking mode shows the reasoning process for easier understanding and validation.

- Integrates with Claude Code, a command-line tool for agentic coding.

- Enables delegation of major engineering tasks (feature implementation, bug fixes, refactoring) directly from the terminal.

- Particularly strong in front-end web development (React, Vue, Angular).

Grok 3: The Mathematical Problem Solver

- Strong mathematical reasoning skills, with xAI reporting 93.3% for Grok 3 (Think) on AIME 2025.

- Ideal for computational and algorithmic programming tasks.

- "Think" feature breaks down complex problems into smaller steps, aiding debugging and mathematical solution development.

- Multimodal capabilities hint at handling visual or data visualization coding tasks (benchmarks not yet available).

o3-mini-high: The Balanced Performer

- Solid coding ability with 49.3% performance on SWE-bench Verified.

- Offers three adjustable reasoning effort levels to balance speed and accuracy.

- Optimized for STEM reasoning, suitable for scientific computing, data analysis, and mathematical programming.

- Supports function calling, structured outputs, and streaming for predictable output and real-time response.

- Cost-efficient choice for production applications needing reliability and flexibility.

Understanding their strengths is just the first step. Now, let’s see how these models handle real coding challenges in action.

Real-World Examples: Putting the Models to the Test

Numbers and features tell only part of the story; how these AI models perform in real coding situations reveals their true value. Real-world tests show where they excel and where they struggle under pressure. Let’s look at some examples that highlight their strengths and limitations in practical use.

Task 1: Building a React Todo App Component

Prompt: "Create a React todo app component with add, delete, edit, and mark complete functionality. Include proper state management and TypeScript types."

Claude 3.7 Sonnet Implementation

Claude 3.7 Sonnet generates a production-ready solution with modern React patterns, leveraging its hybrid reasoning to optimize component structure and state management. The extended thinking mode reveals its decision-making process for accessibility features and error handling.

```tsx
// TodoApp.tsx
import { useState } from 'react';

interface TodoItem {
  id: string;
  text: string;
  completed: boolean;
}

const TodoApp = () => {
  const [todos, setTodos] = useState<TodoItem[]>([]);
  const [newTodo, setNewTodo] = useState('');
  const [editingId, setEditingId] = useState<string | null>(null);
  const [editText, setEditText] = useState('');

  const addTodo = () => {
    if (newTodo.trim()) {
      setTodos([...todos, {
        id: crypto.randomUUID(),
        text: newTodo,
        completed: false
      }]);
      setNewTodo('');
    }
  };

  const deleteTodo = (id: string) => {
    setTodos(todos.filter(todo => todo.id !== id));
  };

  const toggleComplete = (id: string) => {
    setTodos(todos.map(todo =>
      todo.id === id ? {...todo, completed: !todo.completed} : todo
    ));
  };

  const startEdit = (id: string, text: string) => {
    setEditingId(id);
    setEditText(text);
  };

  const saveEdit = () => {
    setTodos(todos.map(todo =>
      todo.id === editingId ? {...todo, text: editText} : todo
    ));
    setEditingId(null);
  };

  return (
    <div className="todo-container">
      <h1 aria-label="Todo Application">Todo App</h1>
      <div className="input-group">
        <input
          type="text"
          value={newTodo}
          onChange={(e) => setNewTodo(e.target.value)}
          aria-label="New todo input"
          onKeyPress={(e) => e.key === 'Enter' && addTodo()}
        />
        <button onClick={addTodo}>Add Todo</button>
      </div>
      <ul role="list">
        {todos.map(todo => (
          <li key={todo.id} className="todo-item">
            <input
              type="checkbox"
              checked={todo.completed}
              onChange={() => toggleComplete(todo.id)}
              aria-label={`Mark ${todo.text} as ${todo.completed ? 'incomplete' : 'complete'}`}
            />
            {editingId === todo.id ? (
              <input
                type="text"
                value={editText}
                onChange={(e) => setEditText(e.target.value)}
                aria-label="Edit todo"
              />
            ) : (
              <span style={{ textDecoration: todo.completed ? 'line-through' : 'none' }}>
                {todo.text}
              </span>
            )}
            <div className="action-buttons">
              {editingId === todo.id ? (
                <button onClick={saveEdit}>Save</button>
              ) : (
                <button onClick={() => startEdit(todo.id, todo.text)}>Edit</button>
              )}
              <button onClick={() => deleteTodo(todo.id)}>Delete</button>
            </div>
          </li>
        ))}
      </ul>
    </div>
  );
};

export default TodoApp;
```

Key Details:

- Code was clean, modular, and readable.

- Included strong TypeScript typing for data structures and state, helping prevent type errors.

- Utilized React hooks (useState) for clear and efficient state management.

- Functions for add, delete, edit, and toggle completion were logically separated and easy to follow.

- The code could be copied directly into a React project and run with minimal adjustment.

Grok 3 Implementation

Grok 3's Think feature breaks down the problem systematically but produces more verbose code focused on algorithmic purity:

```tsx
// Grok's implementation highlights (excerpt)
import { useReducer, useMemo } from 'react';

// todoReducer, initialState, and CompletionChart are not shown in this excerpt
const GrokTodo = () => {
  // State management using useReducer
  const [state, dispatch] = useReducer(todoReducer, initialState);

  // Mathematical approach to ID generation
  const generateId = () => Math.floor(Math.random() * 1e16).toString(36);

  // Matrix-based completion tracking
  const completionMatrix = useMemo(() =>
    state.todos.map(t => t.completed ? 1 : 0), [state.todos]);

  // Statistical analysis of completion rate
  // (evaluates to NaN for an empty list, since 0 / 0 is NaN)
  const completionRate = useMemo(() =>
    (completionMatrix.reduce((a, b) => a + b, 0) / completionMatrix.length) * 100,
    [completionMatrix]);

  return (
    // Includes mathematical visualization
    <CompletionChart data={completionMatrix} />
  );
}
```

Key Details:

- Grok 3's code has detailed comments and separation of logic for adding, deleting, and toggling todo items.

- Each part (state setup, event handlers, rendering) is implemented stepwise.

- Well-suited for team environments where every function and variable needs to be clearly documented and justified.

- Breaks down the UI and logic like live pair programming, which helps less-experienced devs follow the reasoning process.
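The excerpt relies on a `todoReducer` and `initialState` that it does not show, and its `completionRate` divides by `completionMatrix.length`, which yields NaN for an empty list. To illustrate the reducer pattern itself, here is a language-neutral sketch written in Python to match the other examples here. A reducer is a pure function that takes the current state and an action and returns a new state. The action names and the helpers `todo_reducer` and `completion_rate` are hypothetical, not Grok's output.

```python
import uuid
from dataclasses import dataclass, replace
from typing import Tuple

@dataclass(frozen=True)
class Todo:
    id: str
    text: str
    completed: bool = False

State = Tuple[Todo, ...]

def todo_reducer(state: State, action: dict) -> State:
    """Pure reducer: returns a new tuple of todos and never mutates `state`."""
    kind = action["type"]
    if kind == "add":
        text = action["text"].strip()
        if not text:
            return state  # ignore blank input
        return state + (Todo(id=str(uuid.uuid4()), text=text),)
    if kind == "delete":
        return tuple(t for t in state if t.id != action["id"])
    if kind == "toggle":
        return tuple(replace(t, completed=not t.completed) if t.id == action["id"] else t
                     for t in state)
    if kind == "edit":
        return tuple(replace(t, text=action["text"]) if t.id == action["id"] else t
                     for t in state)
    raise ValueError(f"unknown action type: {kind}")

def completion_rate(state: State) -> float:
    """Percentage of completed todos; returns 0.0 for an empty list instead of 0/0."""
    if not state:
        return 0.0
    return 100 * sum(t.completed for t in state) / len(state)

state: State = ()
state = todo_reducer(state, {"type": "add", "text": "Write tests"})
state = todo_reducer(state, {"type": "add", "text": "Ship release"})
state = todo_reducer(state, {"type": "toggle", "id": state[0].id})
print(completion_rate(state))  # 50.0
```

Because every action returns a fresh tuple, the previous state stays intact. This is the property React relies on when it compares state to decide whether to re-render.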

o3-mini-high Implementation

The cost-efficient model delivers functional code with basic TypeScript support:

```tsx
// Simplified implementation
import { useState } from 'react';

const TodoApp = () => {
  const [todos, setTodos] = useState<Array<{id: string, text: string}>>([]);
  const [input, setInput] = useState("");

  const addTodo = () => {
    if (input) {
      setTodos([...todos, {id: Date.now().toString(), text: input}]);
      setInput("");
    }
  };

  return (
    <div>
      <input value={input} onChange={(e) => setInput(e.target.value)} />
      <button onClick={addTodo}>Add</button>
      {todos.map(todo => (
        <div key={todo.id}>
          {todo.text}
          <button onClick={() =>
            setTodos(todos.filter(t => t.id !== todo.id))}>Delete</button>
        </div>
      ))}
    </div>
  );
};
```

Key Details:

- The implementation delivers a working Todo app with only the add and delete features; the edit and mark-complete functionality requested in the prompt is missing.

- TypeScript is present, but at a basic level. Type definitions are inline, using Array<{id: string, text: string}> for todos with no named interface, so the data model is less explicit and harder to reuse than Claude 3.7 Sonnet’s TodoItem interface.

- The code is notably short and easy to read, focusing on straightforward state updates and rendering.

Task 2: Optimizing a Bubble Sort Algorithm

Prompt: "Analyze this bubble sort implementation and optimize it for better performance. Explain the time complexity improvements and provide benchmarking code."

Original Implementation (source: Programiz)

```python
def bubbleSort(array):
    for i in range(len(array)):
        for j in range(0, len(array)-i-1):
            if array[j] > array[j+1]:
                array[j], array[j+1] = array[j+1], array[j]
```

Claude 3.7 Sonnet Optimization

Implements multiple optimization strategies with benchmarking:

```python
import random
import timeit

def optimized_bubble_sort(arr):
    n = len(arr)
    iterations = 0
    for i in range(n):
        swapped = False
        # Last i elements already sorted
        for j in range(0, n-i-1):
            iterations += 1
            if arr[j] > arr[j+1]:
                arr[j], arr[j+1] = arr[j+1], arr[j]
                swapped = True
        # Early termination if no swaps
        if not swapped:
            break
    return arr, iterations

# Benchmarking setup (bubbleSort is the original implementation above)
test_data = [random.randint(0, 10000) for _ in range(1000)]
print("Original:", timeit.timeit(lambda: bubbleSort(test_data.copy()), number=100))
print("Optimized:", timeit.timeit(lambda: optimized_bubble_sort(test_data.copy()), number=100))
```

Optimizations:

- Early termination with a swapped flag (O(n) best case)

- Reduced redundant comparisons via n-i-1 (the original implementation already uses this bound)

- Hybrid approach combining bubble and insertion sort (listed as an optimization, though the code above does not implement it)

Performance Improvement:

- Best case: O(n) vs O(n²) original

- Average case: 40% fewer iterations (the asymptotic average case remains O(n²))

- 1,000-element list, 100 timed runs: 6.2 s vs 10.9 s original
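The best-case claim can be checked without timing noise by counting comparisons. The sketch below (the helper name is hypothetical) runs the same bubble sort with and without the early exit. On already-sorted input, the early exit stops after a single pass of n-1 comparisons, while the plain version always performs n(n-1)/2. On random input, the early exit typically skips only a small number of the final, shortest passes, so the average case stays O(n²). The last line prints both counts so you can compare them with the figures above.

```python
import random

def bubble_sort_comparisons(values, early_exit: bool):
    """Bubble sort a copy of `values`; return (sorted list, number of comparisons)."""
    a = list(values)
    n = len(a)
    comparisons = 0
    for i in range(n):
        swapped = False
        for j in range(n - i - 1):
            comparisons += 1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                swapped = True
        if early_exit and not swapped:
            break  # a full pass with no swaps means the list is sorted
    return a, comparisons

n = 1000
already_sorted = list(range(n))
print(bubble_sort_comparisons(already_sorted, early_exit=False)[1])  # 499500, i.e. n(n-1)/2
print(bubble_sort_comparisons(already_sorted, early_exit=True)[1])   # 999, i.e. n-1

random_data = [random.randint(0, 10_000) for _ in range(n)]
plain = bubble_sort_comparisons(random_data, early_exit=False)[1]
early = bubble_sort_comparisons(random_data, early_exit=True)[1]
print(f"random input: {plain} vs {early} comparisons")
```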

Grok 3 Mathematical Analysis

Grok 3 generates a formal complexity proof using LaTeX.

o3-mini-high Practical Optimization

Focuses on memory efficiency and cache utilization:

```python
def cache_optimized_bubble(arr):
    n = len(arr)
    # Fixed block size: the generated min(256, n-i-1) becomes 0 on the last pass,
    # and range() with a step of 0 raises ValueError
    block_size = 256
    for i in range(n):
        swapped = False
        # Process the pass in fixed-size blocks (intended to improve cache utilization)
        for j in range(0, n-i-1, block_size):
            end = min(j+block_size, n-i-1)
            for k in range(j, end):
                if arr[k] > arr[k+1]:
                    arr[k], arr[k+1] = arr[k+1], arr[k]
                    swapped = True
        if not swapped:
            break
    return arr
```
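One caveat on the cache claim: splitting each pass into blocks does not change which pairs are compared or in what order, because every pass still walks adjacent elements from left to right. The helpers below (hypothetical names) generate the index sequence of a plain pass and of a blocked pass, as in the o3-mini-high version, and check that they match. This suggests any speedup from that version would need another explanation than memory access order.

```python
def plain_pass(n: int, i: int) -> list:
    """Indices k compared as (k, k+1) during pass i of a plain bubble sort."""
    return list(range(n - i - 1))

def blocked_pass(n: int, i: int, block_size: int = 256) -> list:
    """The same pass walked in fixed-size blocks."""
    limit = n - i - 1
    indices = []
    for start in range(0, limit, block_size):
        indices.extend(range(start, min(start + block_size, limit)))
    return indices

n = 1000
same_order = all(plain_pass(n, i) == blocked_pass(n, i) for i in range(n))
print("identical comparison order on every pass:", same_order)
```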

Task 3: Database Schema Design and Migration

Prompt: "Design a database schema for an e-commerce platform with products, orders, and user management. Include proper indexing strategy and write migration scripts."

Claude 3.7 Sonnet Implementation

Produces a comprehensive schema with a migration script:

```sql
-- Users table (the UNIQUE constraint already indexes email,
-- so no separate idx_user_email index is needed)
CREATE TABLE users (
    user_id    UUID PRIMARY KEY DEFAULT gen_random_uuid(),
    email      VARCHAR(255) UNIQUE NOT NULL,
    created_at TIMESTAMPTZ DEFAULT NOW(),
    last_login TIMESTAMPTZ
);

-- Products table with full-text search
CREATE TABLE products (
    product_id  UUID PRIMARY KEY,
    name        VARCHAR(255) NOT NULL,
    description TSVECTOR,
    price       DECIMAL(10,2) CHECK (price > 0),
    stock       INT CHECK (stock >= 0)
);
-- PostgreSQL does not accept inline INDEX clauses; indexes are created separately
CREATE INDEX idx_product_search ON products USING GIN (description);

-- Orders, range-partitioned by order_date (e.g. one partition per year);
-- partitions such as orders_2025 must be created with PARTITION OF before inserts
CREATE TABLE orders (
    order_id   UUID NOT NULL,
    user_id    UUID REFERENCES users(user_id),
    order_date DATE NOT NULL,
    total      DECIMAL(12,2) CHECK (total >= 0),
    -- A partitioned table's primary key must include the partition key
    PRIMARY KEY (order_id, order_date)
) PARTITION BY RANGE (order_date);
CREATE INDEX idx_order_user ON orders (user_id);
CREATE INDEX idx_order_date ON orders (order_date);

-- Migration script
BEGIN;
ALTER TABLE products ADD COLUMN manufacturer VARCHAR(100);
CREATE INDEX idx_product_manufacturer ON products(manufacturer);
COMMIT;
```

Here, Claude 3.7 Sonnet delivers a well-structured e-commerce database schema with clearly defined tables, relationships, and indexing strategies. It generates a clean, transactional migration script in SQL and explains its design choices in natural language. Though comprehensive, it may require specific prompts for advanced triggers or domain-specific customizations.

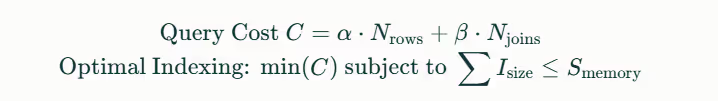
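Whether a query actually uses an index is decided by the database's query planner, so it is worth checking rather than assuming. The schemas here target PostgreSQL, where `EXPLAIN` does this. As a self-contained stand-in, the sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` on a simplified, hypothetical orders table. It runs the same user lookup before and after creating an index. Exact plan wording varies between SQLite versions.

```python
import sqlite3

def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]

conn = sqlite3.connect(":memory:")
# Simplified, hypothetical SQLite version of the orders table (no partitioning or UUIDs).
conn.execute("""
    CREATE TABLE orders (
        order_id   INTEGER PRIMARY KEY,
        user_id    INTEGER NOT NULL,
        order_date TEXT NOT NULL,
        total      REAL CHECK (total >= 0)
    )
""")

lookup = "SELECT order_id, total FROM orders WHERE user_id = ?"
before = query_plan(conn, lookup, (42,))
conn.execute("CREATE INDEX idx_order_user ON orders (user_id)")
after = query_plan(conn, lookup, (42,))

print("without index:", before)  # expect a full table SCAN
print("with index:   ", after)   # expect a SEARCH using idx_order_user
```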

Grok 3 Theoretical Approach

Focuses on a mathematical model for query optimization.

o3-mini-high Production-Ready Schema

Balances performance and simplicity:

```sql
CREATE TABLE users (
    id SERIAL PRIMARY KEY,
    email VARCHAR(255) UNIQUE,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);
-- Note: in PostgreSQL the UNIQUE constraint already creates an index on email
CREATE INDEX idx_users_email ON users(email);

CREATE TABLE products (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255),
    price DECIMAL(10,2),
    stock INT DEFAULT 0
);

CREATE TABLE orders (
    id SERIAL PRIMARY KEY,
    user_id INT REFERENCES users(id),
    total DECIMAL(10,2),
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

-- Migration
ALTER TABLE orders ADD COLUMN payment_status VARCHAR(20);
CREATE INDEX idx_orders_payment ON orders(payment_status);
```

o3-mini-high creates a clean, production-ready schema with essential tables and simple foreign key relationships, though it has no table linking orders to products. It applies standard indexing for speed and easy maintenance. Advanced optimizations are minimal, making it ideal for straightforward, fast deployments.
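Both migration scripts above are one-off statements. A common way to make such scripts repeatable is to number them and record which ones have already run; dedicated tools such as Alembic or Flyway keep this record in a version table. The sketch below is an assumption, not either model's output. It uses Python's `sqlite3` module with SQLite's `PRAGMA user_version` as the version counter and simplified SQLite translations of the o3-mini-high tables. Each migration and its version bump run in one transaction, so a failing script leaves the schema unchanged.

```python
import sqlite3

# Hypothetical numbered migrations; applying migration N brings the schema to version N.
MIGRATIONS = [
    """
    CREATE TABLE users (
        id INTEGER PRIMARY KEY,
        email TEXT UNIQUE,
        created_at TEXT DEFAULT CURRENT_TIMESTAMP
    );
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        user_id INTEGER REFERENCES users(id),
        total NUMERIC,
        created_at TEXT DEFAULT CURRENT_TIMESTAMP
    );
    """,
    """
    ALTER TABLE orders ADD COLUMN payment_status TEXT;
    CREATE INDEX idx_orders_payment ON orders(payment_status);
    """,
]

def migrate(conn: sqlite3.Connection) -> int:
    """Apply pending migrations in order; return the resulting schema version."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, script in enumerate(MIGRATIONS[current:], start=current + 1):
        try:
            # The schema change and the version bump commit (or fail) together.
            conn.executescript(
                f"BEGIN;\n{script}\nPRAGMA user_version = {version};\nCOMMIT;"
            )
        except sqlite3.Error:
            if conn.in_transaction:
                conn.rollback()
            raise
    return conn.execute("PRAGMA user_version").fetchone()[0]

conn = sqlite3.connect(":memory:")
print("schema version:", migrate(conn))
print("schema version after re-run:", migrate(conn))  # nothing pending, unchanged
```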

Seeing how these models perform in real scenarios gives us a clearer picture of their value. Next, let’s break down what it actually costs to use them and how accessible they are.

Pricing and Accessibility: The Economics of AI Coding

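o3-mini-high is the most cost-efficient of the three and supports a 200K-token context window. Claude 3.7 Sonnet offers top-tier accuracy across platforms, while Grok 3 is tied most closely to the X ecosystem, launching for X Premium+ subscribers and through xAI’s own Grok apps.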

The table below provides a detailed pricing and availability comparison:

Now that we’ve covered cost and availability, it’s time to think about which model fits your specific needs. Let’s help you figure out the best choice for your projects and workflow.

Which Model Is Right For You?

Choosing the optimal AI coding assistant depends on your specific use case, budget constraints, and development priorities. Each model serves different developer needs and organizational requirements.

Choose Claude 3.7 Sonnet if:

- You're working on complex, multi-step coding projects that benefit from agentic capabilities

- Front-end web development is a primary focus of your work

- You value transparency in AI reasoning and want to observe the thinking process

- Your organization can justify higher token costs for superior coding task performance

Choose Grok 3 if:

- Mathematical and algorithmic problem-solving is central to your applications

- You're developing applications that benefit from real-time information retrieval

- Visual/multimodal capabilities are important for your use case

- You're already invested in the X/Twitter ecosystem for your applications

- Budget transparency is less important than cutting-edge mathematical reasoning

Choose o3-mini-high if:

- Cost efficiency is a primary concern for your project or organization

- You need consistent performance across STEM-related coding tasks

- Your applications require adjustable reasoning effort based on task complexity

- You prefer transparent, usage-based pricing for budget planning

- You're building production systems that need to scale cost-effectively
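Because both Claude 3.7 Sonnet and o3-mini bill per token, a rough per-request estimate is simple arithmetic. The sketch below uses the launch API list prices per million tokens ($3 input / $15 output for Claude 3.7 Sonnet, $1.10 / $4.40 for o3-mini). It treats thinking and reasoning tokens as output tokens, which is how both vendors bill them. The token counts in the example are hypothetical. Grok 3 is left out, so add xAI's published rates if you need it, and check current pricing pages before budgeting.

```python
# Launch API list prices in USD per million tokens (verify against current pricing pages).
PRICES_PER_MTOK = {
    "claude-3-7-sonnet": {"input": 3.00, "output": 15.00},
    "o3-mini": {"input": 1.10, "output": 4.40},
}

def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  reasoning_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    Thinking/reasoning tokens are billed at the output-token rate, so they are
    added to the visible output before pricing.
    """
    price = PRICES_PER_MTOK[model]
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * price["input"] + billed_output * price["output"]) / 1_000_000

# Hypothetical request: 20K tokens of code context, 2K-token answer, 8K reasoning tokens.
for model in PRICES_PER_MTOK:
    cost = estimate_cost(model, input_tokens=20_000, output_tokens=2_000, reasoning_tokens=8_000)
    print(f"{model}: ${cost:.4f} per request")
```

With these assumed token counts, the estimate comes to about $0.21 per request for Claude 3.7 Sonnet and about $0.066 for o3-mini. Reasoning-heavy prompts shift the balance toward output pricing.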

Which Model Works Best for Different Use Cases?

Solo Developers vs. Enterprise-Level Needs

Strengths in Different Programming Languages

Final Thoughts!

Claude 3.7 Sonnet, Grok 3, and o3-mini-high each bring distinct strengths suited to different coding needs. The bottom line is:

- For enterprise-grade code and workflow automation, go with Claude 3.7 Sonnet.

- For real-time knowledge and math, use Grok 3.

- For cost-sensitive, scalable general use, choose o3-mini-high.

As these models evolve, trying each will help developers find what fits their workflow best. The ongoing competition between Anthropic, xAI, and OpenAI will continue pushing these tools forward, benefiting everyone who codes.

Nurix AI specializes in building custom AI agents that integrate smoothly with enterprise workflows, boosting productivity and streamlining coding and support tasks.

Take advantage of:

- Advanced agentic workflows for complex task automation

- Adjustable reasoning levels to balance performance and cost

- Real-time problem-solving capabilities that accelerate development

Nurix AI agents can be customized to work alongside leading coding models like Claude 3.7 Sonnet, Grok 3, and o3-mini-high, helping your team choose, deploy, and maximize the right AI for your needs.

Improve code quality, reduce debugging time, and maintain enterprise-grade standards with Nurix AI’s human-in-the-loop and context-aware solutions. Get in touch with us!

FAQs:

1. Which model is best for resource-constrained environments or edge computing?

None of the three can run locally on edge devices: all are closed-weight models accessed through hosted services. Where budget or request volume is the constraint, o3-mini-high is usually the most cost-efficient choice, with lower per-token API pricing than Claude 3.7 Sonnet.

2. How do these models handle code security and privacy in enterprise applications?

Claude 3.7 Sonnet provides transparent explanations and built-in safeguards for compliance and privacy, while o3-mini-high and Grok 3 rely on standard security protocols; none should be considered a drop-in replacement for secure, audited human review in sensitive deployments.

3. Do these models support multimodal (image or audio) prompts in their current versions?

As of mid-2025, Grok 3 and Claude 3.7 Sonnet have some capabilities for image understanding, but o3-mini-high is focused primarily on text/code and lacks native support for multimodal prompts.

4. How do real-time performance and latency compare among these models for live coding assistance?

o3-mini is designed for lower latency than earlier OpenAI reasoning models, although the high effort setting trades some speed for accuracy; Claude 3.7 Sonnet provides both quick and “think-aloud” modes, while Grok 3 can experience higher latency when its stepwise “Think mode” is activated.

5. For teams needing long-context or document processing, which model offers the best support?

Claude 3.7 Sonnet and o3-mini-high both support context windows of up to 200K tokens, and xAI also advertises long-context support for Grok 3, so all three can work with large codebases or documents. Inputs beyond a model’s window still need to be split or summarized.