Nex by Nurix | ASR Episode 22 - Rashi

For many enterprises, the challenge isn’t deploying voice AI, but getting it to work reliably once it’s live.

Customers call with urgent needs. Agents rely on fast, accurate systems. But somewhere between speech and response, things start to fall apart.

The culprit? More often than not, it’s the Automatic Speech Recognition (ASR) layer.

Despite powering everything from virtual agents to post-call analytics, most ASR systems struggle under pressure. Accents, background noise, interruptions. These real-world variables overwhelm off-the-shelf models that were never built for such complexity.

In Episode 22 of Nex by Nurix, Rashi unpacks the limitations of traditional ASR systems and explains how Nurix rebuilt its voice pipeline to deliver context-aware, enterprise-grade recognition that works where it matters most—in the wild.

Here’s what modern ASR needs to succeed, why most systems fail, and how Nurix’s re-engineered stack is setting a new bar for enterprise performance.

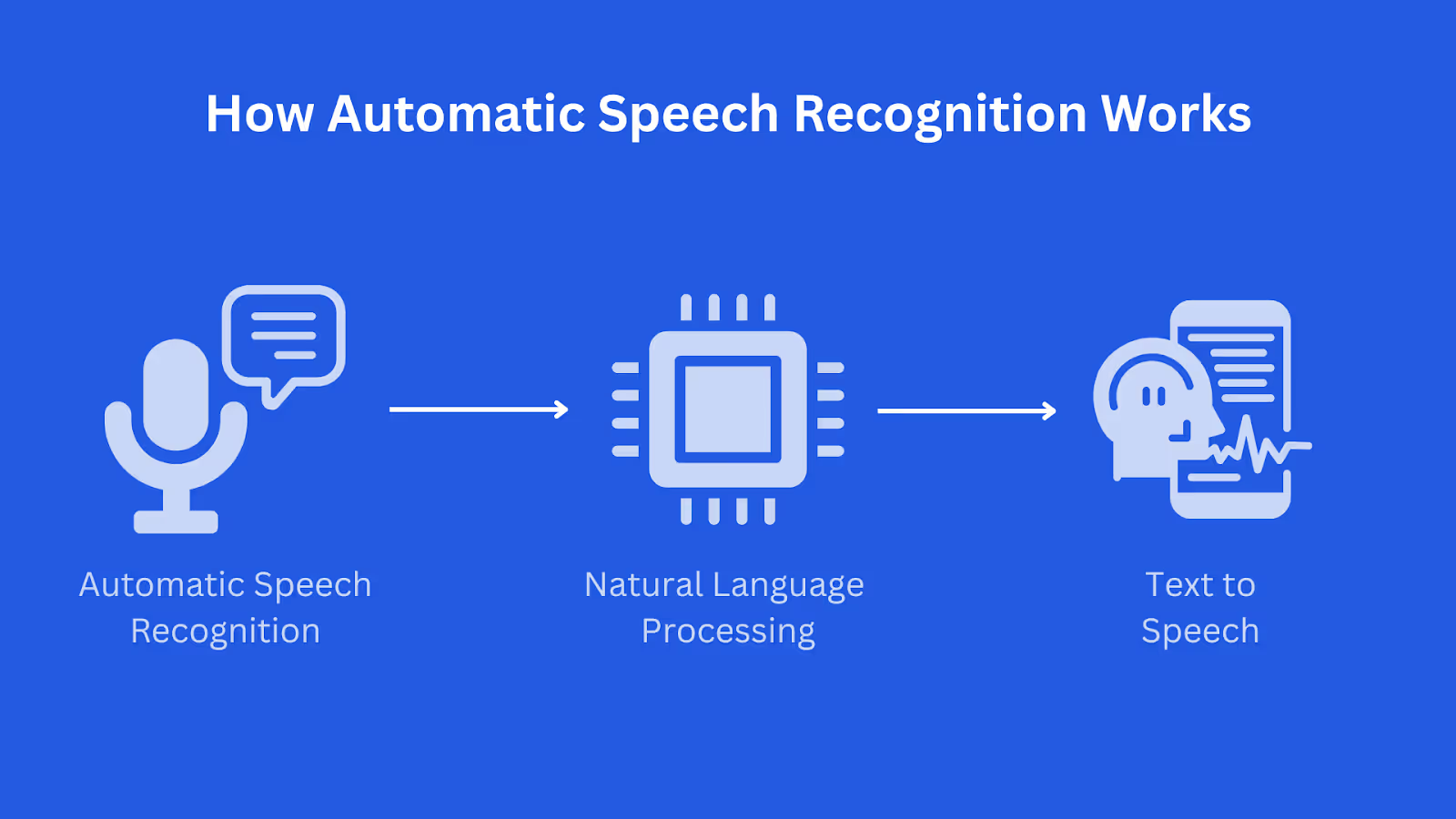

How does Automatic Speech Recognition (ASR) Work?

A robust ASR system goes far beyond transcription. To handle real-world enterprise needs, it must include:

1. Speech-to-Text (STT)

This is the foundation. But poor transcription quality has a cascading effect—leading to missed intent, irrelevant responses, and user frustration.

According to McKinsey, a 5% drop in STT accuracy can lower CSAT scores by as much as 17%. Clean input isn’t optional—it’s critical.

2. Diarization

Speaker separation ensures the system knows who’s speaking and when. Without this, multi-speaker transcripts turn into guesswork, especially in customer support, where context is everything.

3. Sentiment and Emotion Detection

It’s not just about what was said—but how. Tone, hesitation, stress, and emphasis all offer signals that can help the system adapt in real time.

In our internal testing, layering sentiment cues improved resolution accuracy by 28%.

4. Redaction

Accurate ASR must recognize and remove sensitive data—credit card numbers, addresses, IDs—whether spoken clearly or repeated hesitantly. Compliance depends on it.

Automatic Speech Recognition Powers Everyday Interactions

ASR operates behind the scenes in almost every voice-enabled system:

- Voice-Based Customer Support

ASR converts speech to structured data so AI agents can understand, act, and resolve. - Live Captioning and Accessibility

From video calls to webinars, ASR turns live speech into readable content—improving accessibility and searchability. - Post-Call QA and Analytics

Transcripts help quality teams measure sentiment, spot red flags, and ensure agents follow SOPs. - Media and Content Monitoring

ASR can scan podcasts, news clips, and voice feeds in real time—flagging risky or non-compliant content before it spreads

Why Most ASR Tools Don’t Survive the Real World

Off-the-shelf systems often perform well in demos: single speaker, studio-quality audio, standard English.

But real conversations are unpredictable.

- A user mixes English with regional language.

- Two speakers talk at once.

- Background noise makes half the words inaudible.

This is where most ASR systems fail:

- Diarization can’t keep up with interruptions.

- Sentiment models detect only basic moods and miss context cues.

- Redaction fails when data is mumbled or repeated.

A 2024 AssemblyAI study found that 27% of systems missed redaction opportunities when callers didn’t say sensitive data clearly the first time.

The Nurix Approach: ASR Built for Enterprise Chaos

Rather than rely on legacy systems, Nurix engineered its ASR pipeline from scratch, purpose-built for complexity.

1. Custom STT Engine

Trained on noisy, multilingual, real-world audio—our model maintains over 95% accuracy even in difficult environments.

It doesn’t just tolerate background noise or regional accents—it’s optimized for them.

2. X-Vectors for Diarization

We use speaker embedding techniques to assign unique voiceprints to each speaker. This allows the system to separate and track individuals—even across multiple interactions.

3. ECAPA-TDNN for Voice Clarity

This architecture improves voice separation with enhanced channel attention. It works well in messy, overlapping speech—enabling precise diarization without loss of context.

4. Emotion Intelligence

Instead of labeling emotion with a handful of fixed categories, our models detect hesitation, emphasis, urgency, and more. These cues allow the system to adjust responses dynamically.

5. SLM-Based Redaction

We use small language models trained via distillation on LLM-generated data. These lightweight models recognize sensitive data even when it’s repeated, stuttered, or embedded in noise.

The result: compliance-grade redaction without latency or overuse of compute.

At Nurix, we’ve built ASR to meet the demands of modern enterprise communication: real-time, real-world, and reliably accurate.

Our agents don’t just hear. They listen. Understand. And resolve.

%20Often%20Fails%20in%20Real%20Conversations.avif)