Most AI agents don’t fail because the model is weak. They fail because they’re asked to operate inside workflows and data systems built for humans, not machines. We built Nuplay to help enterprises create agent-ready environments: deterministic, structured, and engineered for machine logic.

What Happens When Intelligent Automation Meets the Real World?

It’s a pattern most companies recognize. You pilot an AI agent. The demo blows everyone away. The agent zips through workflows, automates tedious tasks, and answers complex questions instantly. It’s fast, accurate, and feels like magic.

Then, reality hits.

Once deployed in production, the same agent begins to falter. It loops back with basic questions. It misroutes tickets. It escalates needlessly. Sometimes, it just stops working.

At first, it’s easy to blame the model.

But as Karthik Viswanathan explains on Nex by Nurix: “Agentic AI stumbles when it meets human-shaped workflows and fragmented data. Give the agent clear rule-based steps and clean machine-readable information and it just works. Drop it into guesswork and half-filled fields, it crashes.”

Human-Centric Workflows Aren’t Machine-Ready

Enterprise processes weren’t built for machines. They were built for humans, and that’s a critical distinction.

Humans operate on instinct, social cues, and unspoken norms. We know who the manager is, what "good judgment" feels like, and where to find the most recent invoice even if it’s buried in a Slack thread or tucked away in a personal folder.

But AI agents don’t have that intuition. They rely on rules, structure, and defined paths.

Example:

Consider a finance approval flow. A step in the process might say:

“Check with your manager if you are unsure.”

For a human, this is clear. We know who we report to. But an AI agent? It hits a wall. There’s no logic path for “if unsure”, and no system query for “your manager.”

That ambiguity forces the agent to escalate, time out, or, worst of all, guess. And when agents guess in production, trust erodes.
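To make the contrast concrete, here is a minimal Python sketch of the same approval step rewritten for an agent. It assumes a hypothetical org directory in which every employee record carries a manager ID and an approval limit; the names, amounts, and the `finance-review-queue` fallback are illustrative, not part of any real system. “If unsure” becomes an explicit rule (the amount exceeds an approver’s limit), and “your manager” becomes a lookup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Employee:
    id: str
    manager_id: Optional[str]   # None at the top of the reporting chain
    approval_limit: float       # hypothetical per-person spending authority


# Hypothetical org directory; in practice this would come from an HR system.
DIRECTORY = {
    "ana": Employee("ana", "raj", 1_000),
    "raj": Employee("raj", "mei", 10_000),
    "mei": Employee("mei", None, 100_000),
}

FALLBACK_QUEUE = "finance-review-queue"  # hypothetical explicit fallback, never a guess


def resolve_approver(requester_id: str, amount: float,
                     directory: dict[str, Employee]) -> str:
    """Replace 'check with your manager if unsure' with a deterministic rule:
    walk up the requester's reporting chain to the first manager whose
    approval limit covers the amount."""
    if requester_id not in directory:
        raise KeyError(f"unknown requester: {requester_id}")
    current = directory[requester_id]
    while current.manager_id is not None:
        current = directory[current.manager_id]
        if amount <= current.approval_limit:
            return current.id
    # Nobody in the chain can approve: route to a named queue instead of guessing.
    return FALLBACK_QUEUE
```

With this directory, a 500 request from `ana` routes to `raj`, a 50,000 request goes to `mei`, and anything above every limit lands in the explicit review queue rather than being guessed at.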

The Hidden Cost of Fragmented Data

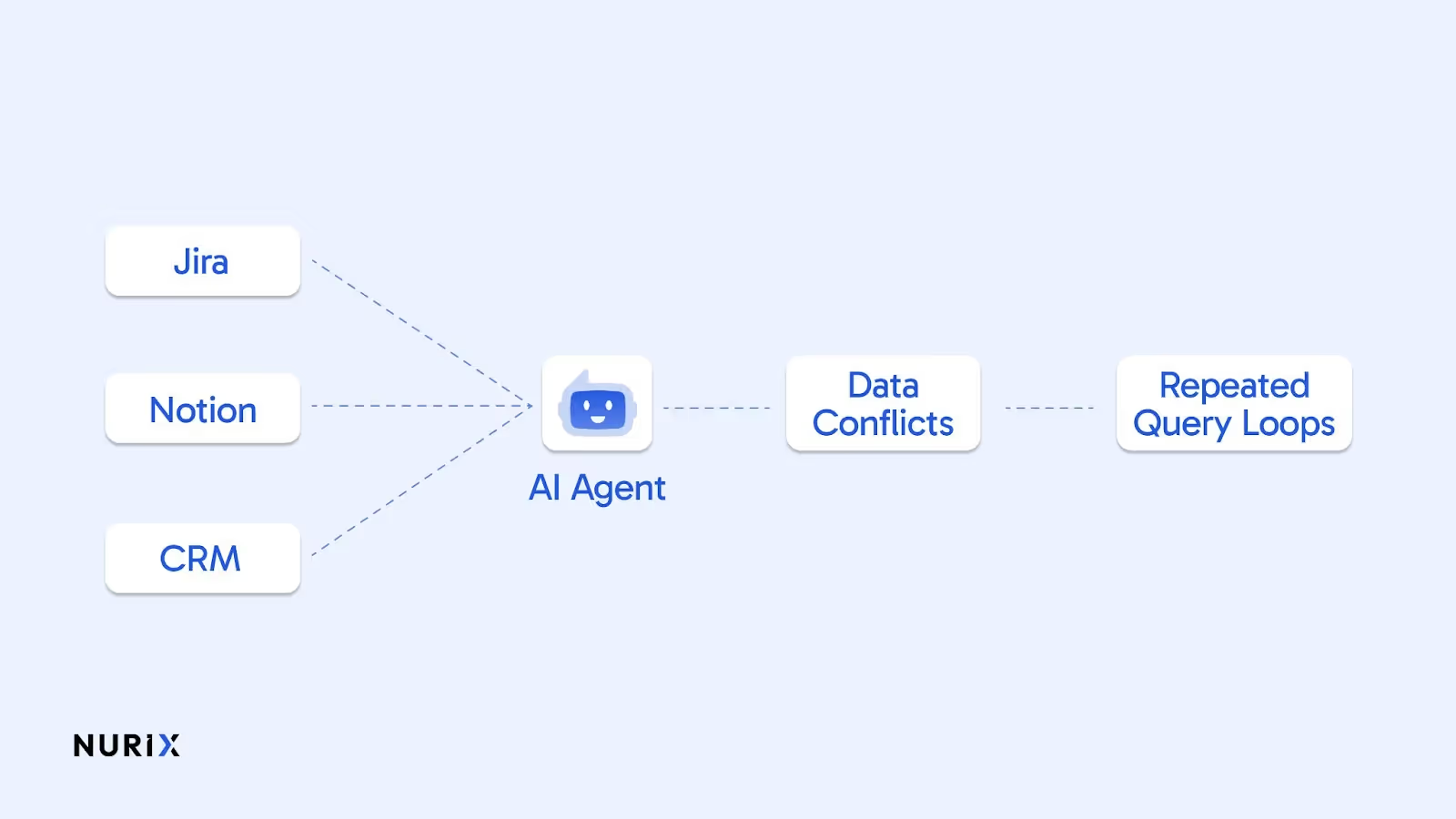

Even when workflows are relatively structured, the underlying data landscape often isn’t. And this fragmentation kills agent performance.

Real-World Examples:

- A Jira ticket is tagged In Progress, but a spreadsheet says it’s Blocked.

- A crucial document exists in Notion, SharePoint, and a personal Google Drive, with a different version in each.

- Pricing terms like MRP, MSRP, ASP, and Ex-Factory are used interchangeably, with no formal glossary.

- CRMs and ERPs have blank fields like Renewal Intent or Priority, leaving agents with no context to act on.

Agents can technically read all of this, but they can’t judge which source is correct.

That’s where things unravel. The agent loops, asks for the same clarifications twice, or defaults to inaction.
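One way to stop the looping is to decide ahead of time which system is authoritative for each field and which fields are required. The sketch below assumes a hypothetical per-field system-of-record policy (Jira owns status, the CRM owns priority); the policy, field names, and `reconcile` function are illustrative. The agent still acts on the authoritative value, but it surfaces every conflict or blank once, as a structured issue, instead of guessing or asking repeatedly.

```python
from typing import Any

# Hypothetical policy: which system is authoritative for each field.
SYSTEM_OF_RECORD = {"status": "jira", "priority": "crm", "renewal_intent": "crm"}
# Hypothetical: fields the agent cannot act without.
REQUIRED_FIELDS = ("status", "priority")


def reconcile(records: dict[str, dict[str, Any]]) -> tuple[dict[str, Any], list[str]]:
    """Merge per-system records using the system-of-record policy.

    `records` maps a source name (e.g. "jira", "sheet") to its field values.
    Returns the resolved fields plus a list of issues to raise exactly once.
    """
    resolved: dict[str, Any] = {}
    issues: list[str] = []
    all_fields = {f for rec in records.values() for f in rec} | set(REQUIRED_FIELDS)
    for field in sorted(all_fields):
        # Treat None and empty strings as blank, like a half-filled CRM field.
        present = {src: rec[field] for src, rec in records.items()
                   if rec.get(field) not in (None, "")}
        owner = SYSTEM_OF_RECORD.get(field)
        if owner in present:
            # The owning system wins; disagreeing sources are reported, not merged.
            resolved[field] = present[owner]
            conflicts = sorted(src for src, v in present.items() if v != present[owner])
            if conflicts:
                issues.append(f"{field}: {owner} says {present[owner]!r}, conflicts in {conflicts}")
        elif field in REQUIRED_FIELDS:
            issues.append(f"{field}: no value in system of record ({owner})")
    return resolved, issues
```

Applied to the Jira-versus-spreadsheet case with a blank CRM priority, this resolves status to Jira’s value and returns two issues: one conflict and one missing required field.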

When Every Team Builds Its Own Rules

The problem compounds when every team creates its own workarounds.

In the absence of a shared structure, each team builds its own:

- File naming conventions

- Process steps

- Exception handling paths

- Data standards

Soon, a single support ticket might:

- Get reassigned to four different people in one day

- Bounce between tools: Jira, Slack, email

- Hit three different process forks depending on which team touches it

For agents, this is like trying to drive through a city where every intersection has different, changing traffic rules.

There’s no clear path. Just ambiguity, forks, and contradictions.

Why Demos Work and Real Deployments Don’t

This brings us back to the original paradox.

In a demo, the environment is clean. The workflows are curated. The inputs are complete and consistent. Every step has a defined path. Ambiguity is removed by design.

In production? None of that holds true.

The agent is dropped into:

- Partially filled fields

- Undefined handoffs

- Multiple systems with conflicting data

- Workflows that rely on tribal knowledge

When this happens, the impressive AI demo suddenly becomes an expensive operational liability. Teams have to handwrite patchwork logic to cover the gaps. Or worse, they revert to doing the work manually and babysitting the agent.

Over time, AI becomes a burden, not a boost.

Enterprise Impact:

- Morale dips as agents underdeliver

- Leaders start dismissing AI as hype

- Budgets shift elsewhere

Agentic AI Isn’t Magic, It’s Engineering Precision

An AI agent isn’t a mind reader. It doesn’t make intuitive leaps. It follows logic exactly as written. No more, no less.

When we give it structured data, clear instructions, and deterministic flows, the results are transformative:

- Fewer escalations

- Faster decisions

- Less babysitting

- More trust in automation

But when we drop it into a process built for humans, failure isn’t just likely; it’s inevitable.

At Nuplay, we make agents work by designing the environment to support them.

Build the World Agents Can Thrive In

Agentic AI is a leap forward in enterprise automation, but only when deployed thoughtfully.

We can’t expect agents to succeed in environments built for people. We must engineer clarity, eliminate ambiguity, and design with agents in mind from the ground up.

When we do, AI agents don’t just work.

They thrive.

The Real Problem: The Ecosystem, Not the Agent

At Nuplay, we’ve learned this firsthand: agentic AI only works when the environment is agent-ready.

That means designing deterministic, structured, and governed systems where agents can succeed.

Here's what that looks like:

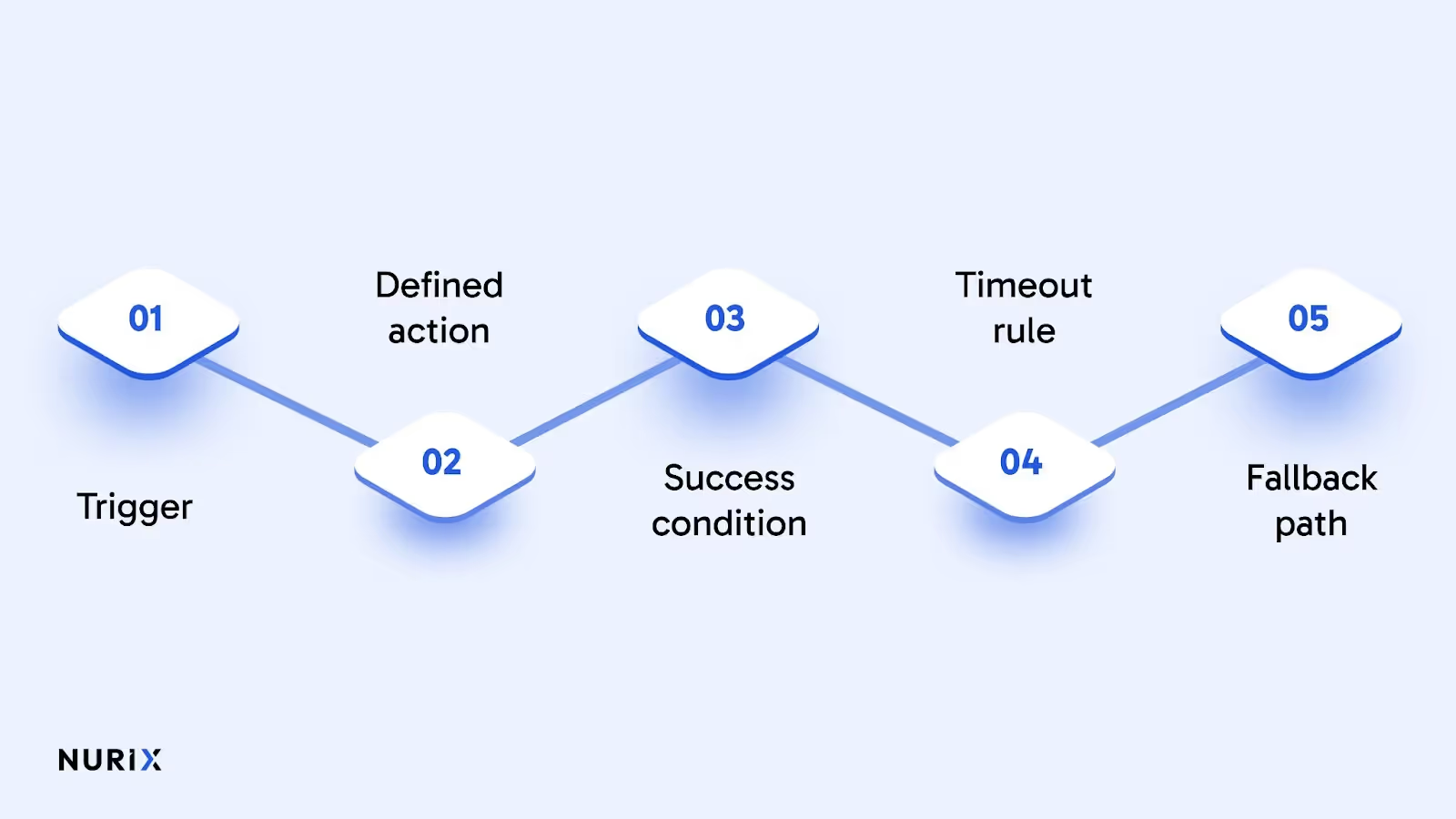

Every Workflow Step Has:

- A trigger

- A defined action

- A success condition

- A timeout rule

- A fallback path
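Those five properties map naturally onto a small data structure. The following Python sketch is illustrative only: `WorkflowStep` and `run_step` are hypothetical names, and the polling-based timeout (re-checking the success condition until a deadline passes) is one simple choice among many. The point is that every outcome, including failure, has a defined destination.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class WorkflowStep:
    name: str
    trigger: Callable[[dict], bool]        # when does this step apply?
    action: Callable[[dict], Any]          # what exactly does it do?
    success: Callable[[Any], bool]         # how do we know it worked?
    timeout_s: float                       # how long we wait for success
    fallback: Callable[[dict, str], str]   # where the work goes otherwise


def run_step(step: WorkflowStep, event: dict, poll_s: float = 0.01) -> str:
    """Run one step deterministically: skip, succeed, or take the fallback path."""
    if not step.trigger(event):
        return "skipped"
    try:
        result = step.action(event)
    except Exception as exc:
        # A failed action is routed, not retried blindly or silently dropped.
        return step.fallback(event, f"action failed: {exc}")
    deadline = time.monotonic() + step.timeout_s
    while True:
        # The success condition may query external state, so re-check it until the deadline.
        if step.success(result):
            return "done"
        if time.monotonic() >= deadline:
            return step.fallback(event, "timed out")
        time.sleep(poll_s)
```

A ticket-routing step, for example, might use `lambda e: e.get("type") == "ticket"` as its trigger and a fallback that hands the ticket to a named human queue along with the reason.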

Each Process Includes:

- Review checkpoints where a human can approve or override

- A glossary of approved terms, including industry-specific acronyms

- Org-aware escalation paths, so edge cases route correctly up the chain of command

This kind of environment removes ambiguity. It aligns logic with org structure. And it gives agents the confidence to operate without asking for help at every step.
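The glossary and review checkpoints can be just as explicit. In this hypothetical sketch, the glossary entries are illustrative definitions that each organization would replace with its own, an unknown term is rejected rather than guessed at, and a set of high-risk actions (assumed here to be refunds and price changes) pauses for a human who can approve or override. `define`, `checkpoint`, and the action names are illustrative.

```python
from typing import Callable

# Illustrative glossary; each organization would substitute its own canonical definitions.
GLOSSARY = {
    "MSRP": "manufacturer's suggested retail price",
    "ASP": "average selling price",
    "EX-FACTORY": "price at the factory gate, before freight and distribution costs",
}

# Hypothetical set of actions that must pause for a human reviewer.
REVIEW_REQUIRED = {"issue_refund", "change_price"}


def define(term: str) -> str:
    """Return the single approved meaning of a term, or fail loudly."""
    key = term.strip().upper()
    if key not in GLOSSARY:
        # Unknown terms are rejected, never guessed at.
        raise LookupError(f"{term!r} is not an approved term; add it to the glossary first")
    return GLOSSARY[key]


def checkpoint(action: str, reviewer: Callable[[str], bool]) -> str:
    """Let low-risk actions through; route high-risk ones to a human decision."""
    if action not in REVIEW_REQUIRED:
        return "auto-approved"
    return "approved" if reviewer(action) else "overridden"
```

In production the `reviewer` callback would be a real approval step, such as a ticket or a chat prompt; here it is simply a function that returns `True` or `False`.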

For organizations ready to move from insight to action, Nuplay offers proven, enterprise-ready solutions that turn the potential of conversational AI into real business results. Discover how Nuplay can work for your organization today. Get in touch with us!