Multimodal AI Agents: Architecture and Key Applications

Ever sent a message that lacked context and left someone puzzled? Now imagine an AI facing the same confusion: processing disjointed snippets of text or isolated images with no way to tie them together. This gap keeps AI systems from fully grasping the richness of human communication, where meaning extends beyond words alone.

Multimodal AI agents address this challenge by working with diverse data types (text, visuals, sound, and more) to build a layered understanding of information. By synthesizing inputs across multiple sensory channels, these agents can make decisions and interact in ways that reflect real-world complexity, bringing nuance to machine-driven understanding.

This guide examines the architecture behind multimodal AI agents, showing how they combine distinct data streams to act intelligently, along with their key applications and the challenges of building them.

Takeaways

- Cross-Modal Alignment Shapes Agent Trust: Synchronizing diverse data types accurately is critical, influencing not just performance but how users trust and adopt multimodal AI agents.

- Real-Time Processing Requires Trade-Offs: Latency and computational load force a careful balance between speed and accuracy, especially for live interactions in resource-limited environments.

- Dataset Annotation Is a Hidden Bottleneck: Labeling multimodal data demands precise synchronization across modalities, raising costs and complexity well beyond those of single-modality annotation.

- Broader Attack Surfaces Raise Security Risks: Multiple input channels expand vulnerability, requiring new defense strategies against adversarial manipulation of any one modality.

- Nurix AI Combines Precision with Scalability: Nurix AI’s platform offers advanced multimodal capabilities integrated into enterprise workflows, driving higher automation rates and customer satisfaction.

What Are Multimodal AI Agents and Why Do They Matter?

Multimodal AI agents process and integrate text, images, audio, video, and sensor data within one system, enabling richer context, smarter decisions, and more natural interactions. By combining multiple inputs, such as analyzing text, tone, and visuals simultaneously, they go beyond reactive responses to deliver proactive, comprehensive solutions.

Why Multimodal AI Agents Matter

- Improved Decision-Making Ability: Multimodal AI agents provide deeper context by synthesizing information from different sources, leading to more accurate, holistic, and reliable decisions across domains like healthcare and manufacturing.

- Human-Like Interaction: These agents support multiple communication styles (text, voice, and images), making interactions more intuitive, natural, and engaging, and reducing friction compared to single-input systems.

- Versatility Across Tasks: They adapt to a broad range of applications, handling various types of data without needing separate systems, enabling one agent to perform complex, cross-modal tasks effectively.

- Operational Streamlining: By automating workflows that require multi-step processing of varied data, multimodal agents reduce dependence on disparate specialist tools and human coordination, saving time and effort.

- Improved Robustness: Cross-validation across multiple input types helps minimize errors caused by poor or noisy data in one modality, increasing reliability in real-world use.

A Reddit user on r/AI_Agents discussing multi-agent AI systems noted the appeal of assigning specialized agents to different tasks to improve automation efficiency. They argued that splitting complex workflows into modular agents (for example, separate experts for web searching, data analysis, and database updates) makes systems easier to manage and more effective than a single monolithic AI.

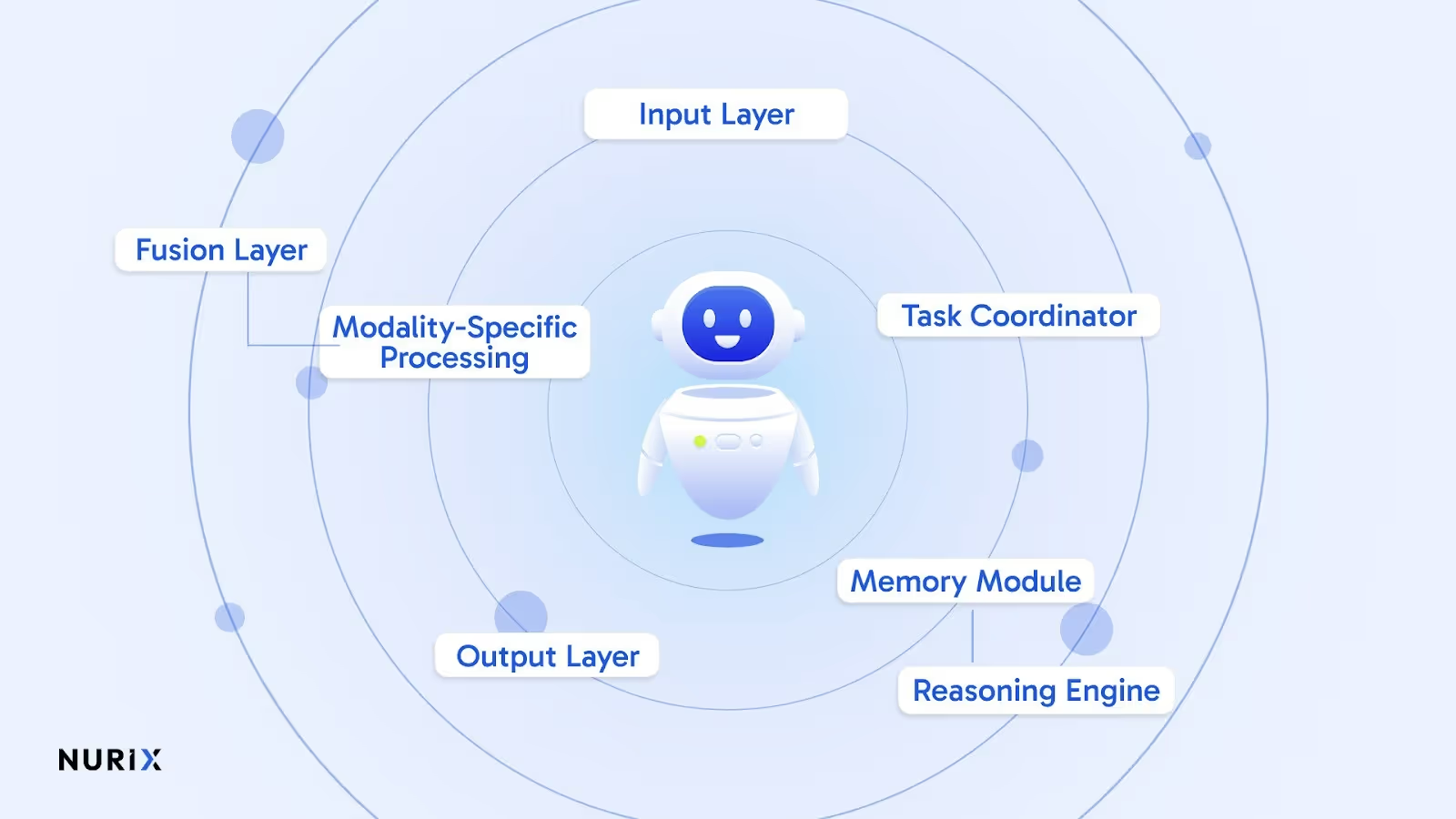

Inside the Architecture of Multimodal AI Agents

Multimodal AI agents process various inputs at the same time, giving them the ability to respond with richer context than any single source could provide. The architecture behind this involves several layers working together to balance and interpret complex information flows. Below are the main components that drive this interaction.

- Input Layer: The agent captures diverse data types (text, images, audio, and video), gathering broad context that reflects user needs and environmental cues at the same time.

- Modality-Specific Processing: Dedicated models analyze each input type separately, such as language models for text, vision networks for images, and speech recognition frameworks for audio, extracting relevant features efficiently.

- Fusion Layer: This component links the processed data into a single, unified representation, typically using attention mechanisms that weigh the relative importance of each modality in the ongoing interaction.

- Reasoning Engine: Working from the fused representation, this core component interprets meaning, makes decisions, and plans responses, balancing the inputs to produce context-aware outcomes.

- Memory Module: Short- and long-term memory stores contextual history, allowing the agent to maintain continuity across exchanges and refine responses based on past data and ongoing feedback.

- Planner and Task Coordinator: This subsystem organizes complex user requests into actionable steps, orchestrating how tasks progress and managing priorities for effective execution by the agent.

- Output Layer: The agent responds naturally by producing text, speech, images, or mixed media, matching user preferences and the conversation flow for an intuitive communication experience.
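
The Fusion Layer described above can be sketched in a few lines of Python. This is a minimal, stdlib-only illustration, assuming each modality-specific model has already produced a feature vector of the same size (projected into a shared space) and that a query vector summarizing the current interaction is available; the function name `attention_fuse` and the toy vectors are hypothetical. It uses scaled dot-product scores and a softmax, which is one common way to realize attention-based weighting, not the method of any particular product.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features: Dict[str, Vector], query: Vector) -> Tuple[Vector, Dict[str, float]]:
    """Fuse per-modality feature vectors into one vector.

    Assumes every modality encoder has already projected its output into a
    shared space with the same dimension as `query`. Each modality is scored
    by scaled dot product with the query, scores become weights via softmax,
    and the fused vector is the weighted sum of the modality vectors.
    """
    dim = len(query)
    if not features:
        raise ValueError("need at least one modality")
    for name, vec in features.items():
        if len(vec) != dim:
            raise ValueError(f"{name} has dimension {len(vec)}, expected {dim}")

    names = list(features)
    scores = [sum(x * q for x, q in zip(features[n], query)) / math.sqrt(dim) for n in names]
    weights = softmax(scores)

    fused = [0.0] * dim
    for weight, name in zip(weights, names):
        for i, x in enumerate(features[name]):
            fused[i] += weight * x
    return fused, dict(zip(names, weights))


# Toy vectors standing in for encoder outputs (hypothetical values).
features = {
    "text": [0.9, 0.1, 0.0],
    "image": [0.2, 0.8, 0.1],
    "audio": [0.1, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]  # hypothetical summary of the current interaction state
fused, weights = attention_fuse(features, query)
print({name: round(w, 3) for name, w in weights.items()})
```

Because the query here points along the first axis, the text vector scores highest and receives the largest weight. In a trained model the query and projections would be learned, so the weighting would shift from moment to moment as the interaction changes.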

Top Applications of Multimodal AI Agents

Multimodal AI agents process different types of information simultaneously, opening new potential where multiple data streams matter. Their growing presence spans sectors where combining inputs leads to smarter outcomes. Below are some notable ways these agents are being applied today.

1. Healthcare Diagnostics and Medical Decision Support

Multimodal AI agents analyze medical imaging, patient records, and lab results together to provide comprehensive diagnostic support. By combining CT scans, pathology reports, genomic data, and blood tests, these systems aim to deliver more accurate diagnoses than single-source approaches.

How it benefits businesses:

- Diagnostic Precision: Systems reduce diagnostic errors by cross-referencing multiple data sources, with some multimodal models reported to achieve high accuracy across more than 600 diagnoses.

- Predictive Capabilities: Early detection of disease progression through pattern recognition across imaging, lab values, and clinical notes.

- Operational Efficiency: Real-time analysis streamlines workflows and reduces time-to-treatment, supporting faster medical interventions.

2. Manufacturing Quality Control and Predictive Maintenance

Multimodal AI agents monitor production lines using cameras, acoustic sensors, vibration detectors, and thermal imaging to identify defects and predict equipment failures. Systems analyze visual surface quality, machine sounds, temperature patterns, and pressure readings simultaneously.

How it benefits businesses:

- Real-Time Detection: Immediate identification of quality issues with 35-50% improved prediction accuracy over traditional systems.

- Cost Reduction: Prevention of equipment failures through predictive maintenance, reducing scrap rates and production downtime.

- Process Optimization: Continuous monitoring enables dynamic recalibration of quality thresholds, minimizing waste and rework.
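
To make the idea of analyzing several signals simultaneously concrete, here is a deliberately simple sketch, assuming each sensor stream has been reduced to a scalar reading with a recent baseline history. The channel names, thresholds, and data are hypothetical, and per-channel z-scores stand in for the learned models a production system would use; a vision model's defect score could be added as one more channel. The point it illustrates: two channels that are each only mildly abnormal can together justify an alert that neither would trigger alone.

```python
from statistics import mean, stdev
from typing import Dict, List


def zscore(value: float, history: List[float]) -> float:
    """How many standard deviations `value` sits from the channel's baseline."""
    sigma = stdev(history)
    return 0.0 if sigma == 0 else (value - mean(history)) / sigma


def maintenance_alert(
    readings: Dict[str, float],
    baselines: Dict[str, List[float]],
    single_limit: float = 4.0,  # one channel alone this far out triggers an alert
    joint_limit: float = 2.0,   # milder deviation that counts only if shared...
    min_joint: int = 2,         # ...by at least this many channels at once
) -> Dict[str, object]:
    scores = {name: abs(zscore(value, baselines[name])) for name, value in readings.items()}
    strong = sorted(n for n, z in scores.items() if z >= single_limit)
    elevated = sorted(n for n, z in scores.items() if z >= joint_limit)
    alert = bool(strong) or len(elevated) >= min_joint
    return {"alert": alert, "strong": strong, "elevated": elevated, "scores": scores}


# Hypothetical baseline history for three sensor channels on one machine.
baselines = {
    "vibration_g": [1.0, 1.1, 0.9, 1.0, 1.0],
    "temperature_c": [60.0, 61.0, 59.0, 60.0, 60.0],
    "acoustic_level": [0.5, 0.5, 0.6, 0.4, 0.5],
}
readings = {"vibration_g": 1.2, "temperature_c": 61.6, "acoustic_level": 0.52}
result = maintenance_alert(readings, baselines)
print(result["alert"], result["elevated"])
```

Here vibration and temperature each sit only a little over two standard deviations from baseline, so neither crosses the single-channel limit, but together they raise an alert.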

3. Customer Service Automation and Support

Multimodal AI agents process text inquiries, voice tone analysis, uploaded images, and video submissions to provide comprehensive customer support. These systems analyze customer sentiment, product photos, and interaction history to deliver contextual responses.

How it benefits businesses:

- Resolution Speed: Automated triaging and context-aware responses reduce average resolution times from hours to minutes.

- Service Quality: 97% of communications service providers report positive customer satisfaction impact from conversational AI implementations.

- Operational Scale: Unified systems handle multiple communication channels without requiring specialized teams for different problem types.

4. Content Creation and Marketing Automation

Multimodal AI agents generate marketing materials by combining brand guidelines, visual assets, audio elements, and text to produce cohesive campaigns across formats. Systems analyze audience preferences across multiple touchpoints to create personalized content.

How it benefits businesses:

- Production Speed: Quick content generation eliminates time-consuming tool switching and coordination between different media types.

- Brand Consistency: A single coordinated system maintains consistent messaging and visual identity across text, images, and video content.

- Personalization Scale: Analysis of customer behavior, voice patterns, and visual engagement enables hyper-targeted campaigns.

5. Retail Personalization and Inventory Management

Multimodal AI agents analyze browsing behavior, purchase history, voice searches, and visual product interactions to deliver personalized shopping experiences. These systems combine customer data with real-time inventory monitoring through shelf cameras and sensors.

How it benefits businesses:

- Revenue Growth: Personalized recommendations and targeted offers, built on behavior-based product suggestions, increase conversion rates and customer satisfaction.

- Inventory Optimization: Real-time shelf monitoring and demand prediction reduce stockouts while minimizing overstock situations.

- Customer Engagement: Cross-channel personalization creates consistent experiences whether customers shop online, in-store, or via mobile platforms.

6. Intelligent Virtual Assistants and Interface Design

Multimodal AI agents process voice commands, gestures, facial expressions, and screen context to create more natural human-computer interactions. Systems combine automatic speech recognition with visual scene analysis for context-aware responses.

How it benefits businesses:

- User Experience: Natural interaction through multiple input methods increases user engagement and system adoption rates.

- Accessibility Expansion: Support for voice, gesture, and visual inputs makes systems accessible to users with different abilities and preferences.

- Operational Efficiency: Context-aware assistance reduces training time and user errors through intuitive interface interactions.

Challenges When Developing Multimodal AI Agents

Multimodal AI agents work with a mix of data types, which brings its own set of difficulties. Balancing diverse inputs while keeping performance reliable can test both design and resources. The following points highlight some of the common hurdles encountered in building these systems.

- Data Alignment Complexity: Synchronizing multiple data types like text, images, and audio is technically demanding, requiring precise matching across modalities to build coherent and meaningful input representations.

- High Computational Demands: Processing and integrating diverse modalities require significant computing power and memory, pushing the limits of available hardware and increasing development and operational costs.

- Contradictory Input Signals: Multimodal data can sometimes send conflicting cues, forcing the agent to intelligently resolve ambiguity without sacrificing response accuracy or fluidity in interaction.

- Real-Time Processing Bottlenecks: Balancing speed and accuracy when handling multiple data streams simultaneously challenges the system’s ability to deliver timely responses in interactive or live scenarios.

- Interpretability Difficulties: Layers of fused multimodal data make understanding an agent’s internal decision-making harder, complicating debugging, trust building, and validation efforts.

- Training Data Scarcity and Quality: Acquiring large, balanced datasets spanning all relevant modalities is tough; poor quality or biased data can undermine effectiveness and fairness in diverse real-world tasks.

- Model Selection Trade-offs: Choosing between large unified models and modular specialized agents affects scalability, performance, and complexity, requiring careful assessment of project goals and constraints.

- Privacy and Ethical Concerns: Handling sensitive audio, visual, and text data raises challenges around user consent, data security, misuse risks, and regulatory compliance that must be proactively managed.

How Nurix AI Improves CX With Multimodal AI Agents

Nurix AI is an enterprise-focused platform that builds custom multimodal AI agents designed to enhance customer experience and operational efficiency. Its conversational AI solutions automate repetitive tasks in customer support and sales, offering human-like voice and chat interactions 24/7.

Nurix AI agents integrate easily with existing systems like CRM, enabling smooth, consistent conversations across all channels, helping businesses reduce costs and increase customer satisfaction.

- Conversational AI Agents for Always-On Support: Automate repetitive queries and resolve tickets instantly with human-like voice agents, delivering reliable customer service round-the-clock across voice and chat channels.

- Omnichannel Customer Engagement: Engage customers naturally wherever they are (website, app, SMS, or social media), supporting voice or typed communication with unified interaction history and context continuity.

- Smart Escalation to Human Agents: Smoothly transfer complex interactions with conversation summaries and alerts, preserving context and accelerating resolution times for cases requiring human intervention.

- Unified Insights Dashboard: Consolidate voice and chat transcripts into real-time analytics on customer sentiment, CSAT, and operational bottlenecks, empowering continuous support improvements driven by data.

- Effortless System Integration: Deploy quickly with pre-built connectors and flexible APIs that integrate Nurix AI agents into existing enterprise workflows without disruption or heavy development overhead.

The Future of Multimodal AI Agents

Multimodal AI agents are stepping into wider use as businesses commit more resources and real-world results start to show. Beyond the hype, measurable growth and clear ROI underline where these systems are moving next. Here’s a look at what the near future holds.

- Documented Market Growth: The global multimodal AI market is valued at over $1.6 billion in 2024 and projected to reach $42.38 billion by 2034 with a CAGR above 30%.

- Commercial Deployments: Financial, healthcare, manufacturing, and retail sectors have active multimodal AI systems improving customer service, diagnostic accuracy, and quality control today.

- Real Investment Activity: Over $250 million in recent investments from global institutions and private funding signals commercial momentum for multimodal AI platforms in 2025.

- Verified Business Results: Enterprises report ROI improvements over 100% and measurable gains in defect reduction, patient triaging speed, and user experience with multimodal AI agents.
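
The growth figures in the first bullet can be sanity-checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. The snippet below simply plugs in the quoted numbers; it checks internal consistency only and says nothing about whether the forecast itself is reliable.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures as quoted above, in USD billions (2024 -> 2034).
rate = implied_cagr(1.6, 42.38, 2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

With these inputs the implied rate is roughly 39% a year, consistent with "above 30%". Because the 2024 value is stated as a lower bound ("over $1.6 billion"), a higher starting value would imply a somewhat lower rate.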

Final Thoughts!

Many overlook how the complexity of synchronizing diverse data types shapes the future of multimodal AI agents. This challenge goes beyond technical hurdles; it influences how these agents will earn trust and adoption in real-world scenarios where accuracy and contextual understanding matter most.

At Nurix AI, we specialize in developing multimodal AI agents designed to handle such complexities with precision. Our platform offers key features that include:

- Advanced synchronization algorithms to align text, audio, and visual inputs accurately

- Scalable architecture built for efficient resource management across modalities

- Real-time context adaptation for responsive, relevant interactions

- Ethical data handling protocols to maintain privacy and reduce bias

Connect with us to discuss how Nurix AI’s multimodal agent solutions can support your business needs and accelerate intelligent automation efforts.

Multimodal AI agents apply specialized cross-modal alignment strategies and attention mechanisms to weigh and reconcile contradictory signals, aiming for balanced decisions in which no single modality overshadows the others.
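
Attention mechanisms learn how to weigh modalities. A simpler, decision-level (late-fusion) alternative is easier to inspect and shows the same idea of reconciling contradictory signals. The sketch below illustrates that idea under stated assumptions; it is not how any particular agent works. Each modality model is assumed to emit a label with a confidence score, and the reliability weights, the 0.15 margin, and the names `ModalityVote` and `reconcile` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class ModalityVote(NamedTuple):
    modality: str
    label: str
    confidence: float  # the modality model's own confidence, in [0, 1]


def reconcile(votes: List[ModalityVote], reliability: Dict[str, float], margin: float = 0.15) -> Dict[str, object]:
    """Late-fusion vote: weight each modality's label by confidence x reliability.

    If the winning label's share does not beat the runner-up by `margin`,
    the signals are treated as contradictory and no label is returned, so the
    agent can ask a clarifying question or escalate instead of guessing.
    """
    totals: Dict[str, float] = defaultdict(float)
    for vote in votes:
        totals[vote.label] += vote.confidence * reliability.get(vote.modality, 1.0)
    norm = sum(totals.values())
    if norm == 0:
        return {"label": None, "shares": {}, "conflicted": True}
    shares = {label: score / norm for label, score in totals.items()}
    ranked = sorted(shares.values(), reverse=True)
    runner_up = ranked[1] if len(ranked) > 1 else 0.0
    winner = max(shares, key=shares.get)
    conflicted = ranked[0] - runner_up < margin
    return {"label": None if conflicted else winner, "shares": shares, "conflicted": conflicted}


# Hypothetical per-modality reliability weights, e.g. estimated on validation data.
reliability = {"text": 0.6, "voice_tone": 0.9, "video": 0.8}

votes = [
    ModalityVote("text", "satisfied", 0.9),  # e.g. the words "Great, thanks."
    ModalityVote("voice_tone", "frustrated", 0.8),
]
print(reconcile(votes, reliability))  # close call -> conflicted, no label

votes.append(ModalityVote("video", "frustrated", 0.7))
print(reconcile(votes, reliability))  # third modality breaks the tie
```

Returning "no label" on a close call is a design choice: for customer-facing agents, asking a follow-up question is often cheaper than acting on the wrong reading of the situation.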

Processing multiple data streams like video, audio, and text simultaneously introduces latency and high computational demand, often requiring trade-offs between speed and accuracy, especially on resource-constrained devices.
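
One concrete form of the speed-versus-accuracy trade-off is choosing which encoder variant to run for each modality under a latency budget. The sketch below assumes modalities are processed one after another on a single device (so latencies add) and uses summed quality as a crude objective; the `EncoderOption` type, model names, and all latency and quality figures are hypothetical.

```python
from itertools import product
from typing import Dict, List, NamedTuple, Optional


class EncoderOption(NamedTuple):
    name: str
    latency_ms: float  # measured per-request latency on the target device
    quality: float     # offline accuracy estimate in [0, 1]


def choose_encoders(options: Dict[str, List[EncoderOption]], budget_ms: float) -> Optional[Dict[str, EncoderOption]]:
    """Pick one encoder per modality maximizing total quality within a latency budget.

    Assumes sequential processing, so latencies add up. Exhaustive search is
    fine for a handful of modalities and options; it grows exponentially
    beyond that.
    """
    modalities = list(options)
    best_score = float("-inf")
    best_combo = None
    for combo in product(*(options[m] for m in modalities)):
        if sum(o.latency_ms for o in combo) > budget_ms:
            continue  # too slow for this budget
        score = sum(o.quality for o in combo)
        if score > best_score:
            best_score, best_combo = score, combo
    return None if best_combo is None else dict(zip(modalities, best_combo))


# Hypothetical encoder variants with made-up latency and quality figures.
options = {
    "video": [EncoderOption("video-large", 120, 0.92), EncoderOption("video-small", 40, 0.85)],
    "audio": [EncoderOption("asr-large", 90, 0.95), EncoderOption("asr-small", 30, 0.90)],
    "text": [EncoderOption("text-base", 10, 0.90)],
}
for budget in (250, 150, 60):
    picked = choose_encoders(options, budget)
    print(budget, None if picked is None else {m: o.name for m, o in picked.items()})
```

With a generous budget both large models fit; at 150 ms the search keeps the large speech model and downgrades video; at 60 ms no configuration fits, which is the signal to drop a modality or move work off the device.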

Multimodal annotation involves synchronizing and aligning diverse data types with temporal and contextual precision, significantly increasing labeling effort, cost, and risk of misalignment that can degrade model performance.
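
To see why synchronization drives annotation cost, consider time-stamped labels on an audio or transcript track that must be matched to video frames. The sketch below, with hypothetical names and toy data, assumes a constant frame rate and half-open [start, end) segments. It maps each frame to the segment covering it and surfaces two common annotation errors: gaps, where frames receive no label, and overlaps, where two labels claim the same moment.

```python
from bisect import bisect_right
from typing import List, NamedTuple, Optional, Tuple


class Segment(NamedTuple):
    start_s: float  # inclusive
    end_s: float    # exclusive
    label: str


def find_overlaps(segments: List[Segment]) -> List[Tuple[str, str]]:
    """Pairs of consecutive (start-sorted) segments whose spans overlap.

    If any two segments overlap, at least one consecutive pair does, so an
    empty result means the annotations are overlap-free.
    """
    ordered = sorted(segments, key=lambda s: s.start_s)
    return [(a.label, b.label) for a, b in zip(ordered, ordered[1:]) if b.start_s < a.end_s]


def align_frames(segments: List[Segment], fps: float, duration_s: float) -> List[Optional[str]]:
    """Label each video frame with the annotated segment covering its timestamp.

    Frames that fall in gaps between segments get None. Assumes segments do
    not overlap; run find_overlaps first.
    """
    ordered = sorted(segments, key=lambda s: s.start_s)
    starts = [s.start_s for s in ordered]
    labels: List[Optional[str]] = []
    for i in range(int(duration_s * fps)):
        t = i / fps
        idx = bisect_right(starts, t) - 1  # last segment starting at or before t
        covered = idx >= 0 and t < ordered[idx].end_s
        labels.append(ordered[idx].label if covered else None)
    return labels


# Hypothetical annotations on a 2-second clip sampled at 2 frames per second.
segments = [Segment(0.0, 0.9, "greeting"), Segment(1.2, 2.0, "question")]
print(find_overlaps(segments))
print(align_frames(segments, fps=2, duration_s=2.0))
```

The frame at 1.0 s falls in the gap between the two segments and comes back unlabeled. Checks like these are cheap to run over a whole dataset and catch misalignment before it reaches training.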

With multiple input points, multimodal agents face wider attack surfaces where manipulated data in any modality can deceive or corrupt the overall decision process, making unified defense mechanisms a challenging area of research.

Behind the scenes, these agents demand high-performance infrastructure, multidisciplinary expertise, extended training times, and continuous data pipeline management, often doubling expenses relative to traditional AI systems.